Data access control: quixotic enterprise initiative, or the top priority of a startup’s first data hire?

That’s the question I had to ask a year ago as I started pulling together the analytics stack for Immuta — the “automated data access control company.” Between all the standard engineering, analytics, and organizational work, I also faced pressure to “dogfood” our own access control product.

Choosing to do so has been one of the best decisions I’ve made as a data platform owner.

Thinking carefully about access control from the start has forced my team to prioritize rigorous processes around metadata management, since access control is one of the original metadata integration problems. Far from a futile exercise, asking pointed questions about what data must be protected, and why, has made our data team at Immuta more proactive. The practice has set us up to scale access control organically with the company, rather than in fits and starts.

In this post, I’ll share some of the conversations we had in developing our data access policy, and how a focus on metadata integration impacted tool selection. In future posts, we’ll dig into specific tools in our stack, such as Snowflake, dbt, and Looker, and how they integrate with the Immuta policy engine.

Building for access control and governance

The first data hire faces pressure in two directions: make business impact quickly and build with discipline. If you already have executive buy-in, it may be easier to build out your dream architecture right away. At Immuta, the development was iterative, but we were quick to adopt the “modern data stack”: a cloud data warehouse supported by data replication, transformation, and business intelligence tools. (The benefits for small teams have been exhaustively described elsewhere on the internet, so I won’t go into them here.)

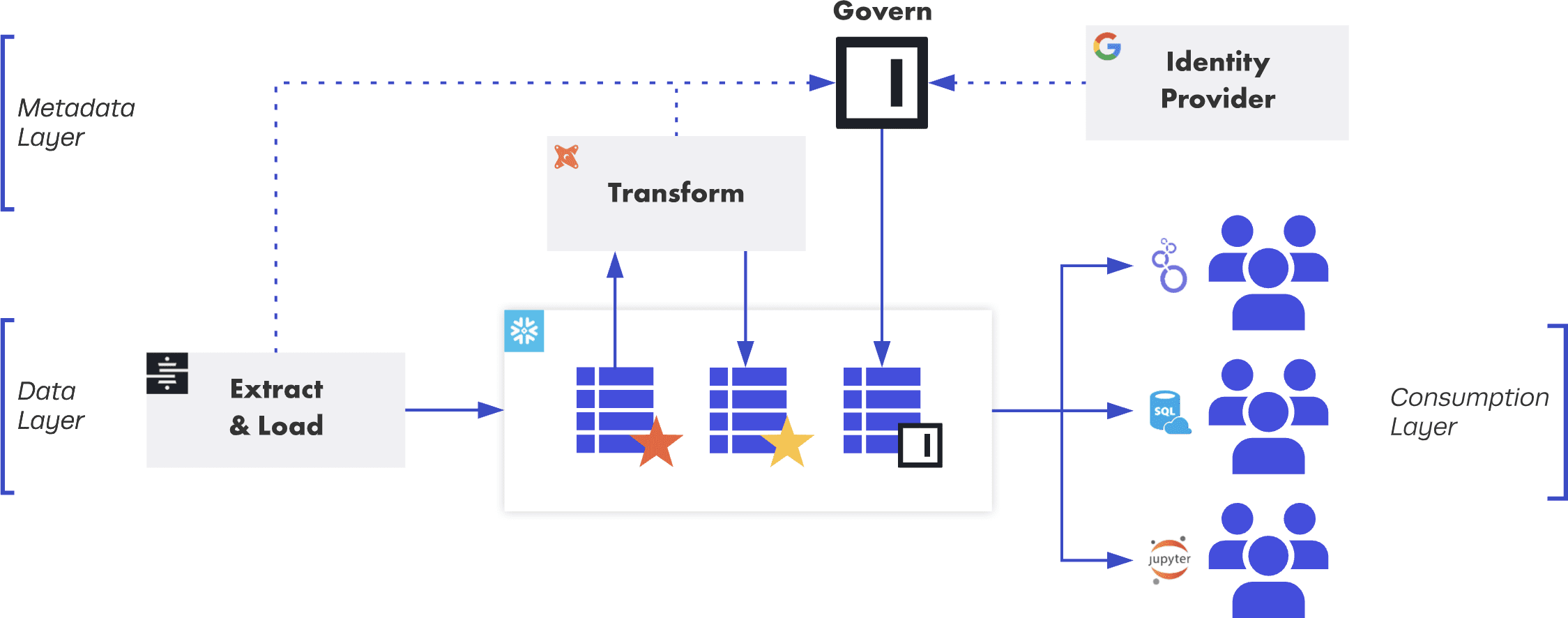

The “modern data stack” with an Immuta access control layer.

I mention discipline here because it is easy to get lost in the frenzy of building architecture and forget that, in a fast-growing company, processes are going to change. Furthermore, you will later be hiring people who are smarter and better than you in each area of the data stack. If the work you do is sloppy, you’ll be slowing down yourself and these future hires. In a sense, building your architecture is your first data access control initiative, because the tools you choose will enable or limit all future efforts.

In my view, modern cloud data access control is the purposeful management and application of metadata. Data quality, observability, discoverability, security — all of these initiatives will fail without a consistent and deliberate approach to managing metadata. Architecturally, then, one of the most important decisions we have made is to ensure that each step in our pipeline generates metadata that can be harvested and used later on.

Access control as the first governance problem

Access control is the first, and least enjoyed, of the metadata problems a team must solve, but there is much more to it than is generally appreciated. Data access control is an optimization problem: how can you give maximum access, with the required restrictions, with the least overhead?

Most teams will start small and follow a set of best practices for implementing role-based access control (this is a helpful read for new data teams). These are great first-pass efforts and will thrive when the data warehouse has little “truly sensitive” data.

But let’s imagine that you are planning for massive growth in both data sources and user base. You can either kick the can a bit further every month — or you can build for scale. From this perspective, access control becomes a sexy data management problem with two objectives:

- Objective 1: Data should be as accessible as possible to all stakeholders since data products should help build consensus across departments, not become siloed on individual teams.

- Objective 2: Sensitive data should be protected by default since data that is confidential to the business, regulated, or related to specific people could create serious risks if disclosed.

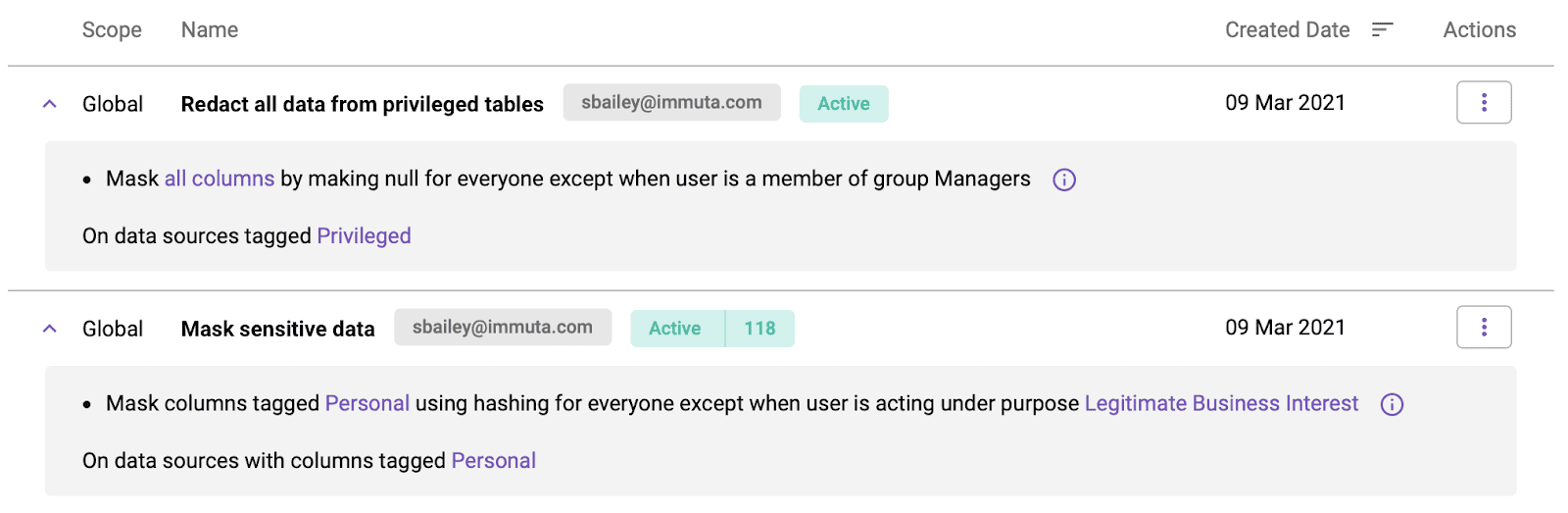

The most efficient way to implement these objectives is through a “safe harbor” approach: Apply a set of global, metadata-based rules to all your data that reduces risk to its highest acceptable level. Then, you can explicitly peel back these rules for groups with authorizations (for example, marketing could see individual lead names). In this way, all access to sensitive data is explicitly authorized. Putting the objectives above into practice might result in a human-readable policy like this:

- All employees may know the existence of all tables and issue queries against them, but the results will be altered according to our data policies.

- All columns containing personal information will be masked unless the user has received an authorization for using the data for a certain purpose.

- All tables containing confidential information will be entirely redacted unless the user has the appropriate authorization (group membership or user attribute).

Notice how we avoid talking about any specific data sources or user groups at this point. Human Resources data is an obvious example of sensitive data we must protect, but the actual policy is independent of that specific domain.
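To make the three rules above concrete, here is a minimal Python sketch of how a metadata-driven policy might be evaluated. It is an illustration only, not how Immuta implements policies: the tag names, the `User` and `Table` classes, and the `apply_policy` function are all hypothetical, and purpose-based authorization is simplified to a set of strings attached to the user.

```python
from dataclasses import dataclass, field

# Hypothetical tag names; a real deployment would read these from its metadata catalog.
PERSONAL = "personal_information"
CONFIDENTIAL = "confidential"


@dataclass(frozen=True)
class User:
    name: str
    authorizations: frozenset = frozenset()  # simplified stand-in for groups/attributes


@dataclass
class Table:
    name: str
    column_tags: dict                        # column name -> set of tags
    table_tags: set = field(default_factory=set)
    rows: list = field(default_factory=list)


def apply_policy(user, table):
    """Return the rows a user sees under the global, tag-based rules."""
    # Rule 1: every user may query every table, so this function never refuses.
    # Rule 3: confidential tables are fully redacted without authorization.
    if CONFIDENTIAL in table.table_tags and CONFIDENTIAL not in user.authorizations:
        return []
    # Rule 2: personal columns are masked unless the user is authorized.
    mask_personal = PERSONAL not in user.authorizations
    visible = []
    for row in table.rows:
        visible.append({
            col: "MASKED"
            if mask_personal and PERSONAL in table.column_tags.get(col, set())
            else value
            for col, value in row.items()
        })
    return visible


leads = Table(
    name="leads",
    column_tags={"lead_name": {PERSONAL}, "region": set()},
    rows=[{"lead_name": "Ada", "region": "EMEA"}],
)
analyst = User("analyst")
marketer = User("marketer", frozenset({PERSONAL}))

print(apply_policy(analyst, leads))   # lead_name is masked
print(apply_policy(marketer, leads))  # lead_name is visible
```

The policy function refers only to tags and authorizations, never to a specific table or team, which is the property the human-readable policy is meant to have.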

One policy, global scope

For nascent data teams, the value proposition of metadata-driven access control is scalability. Abstracting the policy logic from the specifics of implementation (view definitions, roles) unlocks flexibility and makes the platform more evolvable over time, in much the same way as separating storage from compute, or extract and load from transform.

The big lift, in fact, is not writing the access policy in Immuta, which takes only a minute or so. The effort is spent in ensuring that the entire pipeline is interoperable and can be updated at the data team’s pace. These technical challenges include:

- Pushing metadata from transformation or orchestration tools like dbt and Argo Workflows into Immuta’s metadata catalog to activate policies

- Synchronizing authentication systems for users to enable password-less access and personalized access to dashboards

- Creating “clean room” schemas for developers to enable creation of new data products in the databases

I’ll dig into these challenges over the next few posts as we explore how Immuta integrates with Snowflake, dbt, and Looker for Immuta’s internal data stack.
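As a small preview of the first challenge, the sketch below harvests column-level tags from a dbt manifest so they could later be pushed to a catalog. It assumes the `manifest.json` layout in which each node under `nodes` carries a `resource_type`, a `name`, and a `columns` mapping whose entries have a `tags` list; field names may differ between dbt versions. The `harvest_column_tags` function and the sample data are hypothetical, and the push step is left out because it depends on the catalog's API.

```python
import json


def harvest_column_tags(manifest):
    """Map 'model.column' to its sorted tags for every tagged model column."""
    tagged = {}
    for node in manifest.get("nodes", {}).values():
        # Skip tests, seeds, and other non-model nodes.
        if node.get("resource_type") != "model":
            continue
        for col_name, col in node.get("columns", {}).items():
            tags = col.get("tags", [])
            if tags:
                tagged[f"{node['name']}.{col_name}"] = sorted(tags)
    return tagged


# Inline sample standing in for the manifest file that dbt writes after a run.
sample_manifest = json.loads("""
{
  "nodes": {
    "model.analytics.leads": {
      "resource_type": "model",
      "name": "leads",
      "columns": {
        "lead_name": {"name": "lead_name", "tags": ["personal_information"]},
        "region": {"name": "region", "tags": []}
      }
    },
    "test.analytics.not_null_leads": {
      "resource_type": "test",
      "name": "not_null_leads",
      "columns": {}
    }
  }
}
""")

column_tags = harvest_column_tags(sample_manifest)
print(column_tags)
```

Keeping the harvest step separate from the push step means the same tags can feed more than one downstream tool.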

Conclusion

Data access control is not at the top of the list for new data hires. But as the data community collectively begins to solve the challenges related to discoverability, quality, lineage, observability — and, yes, cloud data access control — I believe we’re going to find that being purposeful with metadata is the common theme. In that mindset, using policy and metadata to drive access control becomes not a surprising priority for new teams, but an obvious place to start the journey.