Lifting and shifting data science operations to the cloud can be expensive and risky. From the various access controls that need to be written and applied to the federal and industry regulations that have to be satisfied — on top of any contractual agreement terms — compliance is a significant cloud migration challenge, and few platforms exist that provide all-in-one cloud data access control.

This leaves data engineers and architects in a precarious situation: The sooner data is on the cloud and accessible to users, the sooner it can be leveraged for business-driving analytics. However, a lack of clarity about regulations and data consumer limitations that need to be applied across data sources, as well as how to apply them consistently, introduces the potential for misuse or unauthorized access, making data engineers and architects personally liable for unintended exposure.

But it doesn’t have to be that way.

A secure cloud migration solution can enforce data policies consistently across all of your enterprise data while saving more than 60% of cloud infrastructure costs.

Separating Compute and Storage

Modern cloud platform solutions and some on-premises data centers separate compute from storage, making data more available and systems more scalable. This increasingly prevalent design allows persistent data to sit at rest in storage (think Amazon Web Services S3, Azure Data Lake, Google Cloud Storage, etc.), so compute servers are spun up only transiently to process that externally stored data when specific computations are needed. In addition to increasing data availability and scalability, separating compute and storage significantly reduces infrastructure costs by eliminating the need for a Hadoop instance to exist in perpetuity.

Separation of compute from storage is the new normal for cloud data platforms, which is important to keep in mind because as storage and computing needs scale independently, access controls may no longer work as expected. This is often an unanticipated cloud migration challenge. When migrating sensitive data to the cloud, having a data access control tool like Immuta, with built-in Database/Hadoop/Spark data privacy controls, will make it significantly easier to support separation of compute and storage for batch workloads. As more platforms migrate sensitive data to the cloud, this function will become increasingly important for data teams.

Enforcing Data Privacy Policies — Without Anti-Patterns

We know separating compute and storage in a cloud migration can thwart the effectiveness of access controls that aren’t built for a cloud environment. That’s why, when migrating sensitive data to the cloud, enforcing dynamic data privacy security controls is a critical task for data engineering and operations teams — but it isn’t always an easy one without the right tools.

For instance, HIPAA enforcement requires masking of certain Protected Health Information (PHI), and GDPR enforcement may require rows of data to be redacted based on the user’s operating country. How are data engineers supposed to anticipate all the nuances of these regulations and enforce policies that allow the appropriate access to various levels of data consumers? Multiply this question by the volume of data coming from multiple sources in a cloud migration, and it’s easy to see how access controls can quickly become difficult to manage.

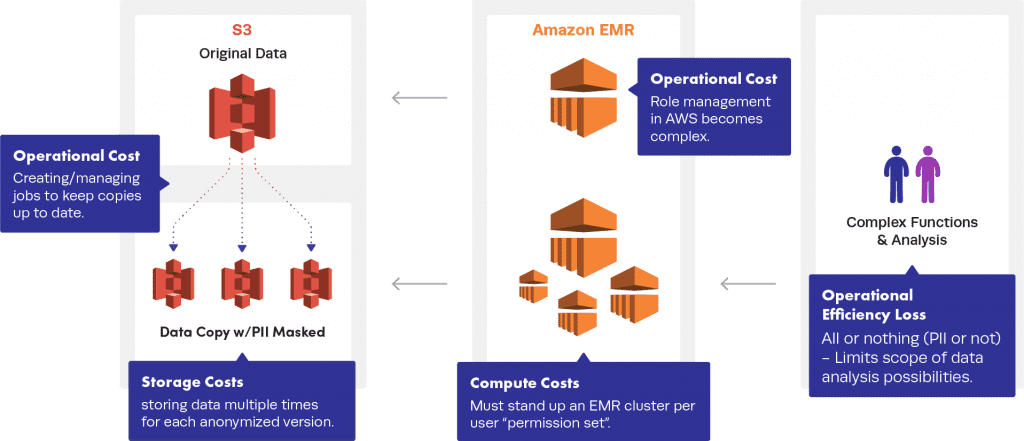

This is because data teams often resort to a common yet flawed approach: creating pseudonymized or anonymized copies of data, then wrapping security controls around those copies. Making copies for the purpose of data privacy controls is considered an anti-pattern (a seemingly good approach that is actually counterproductive) for a number of reasons:

- Making copies costs storage dollars. Every unique combination of policy-to-user type will require a new copy of the data, which can quickly get out of hand.

- Writing and managing the code to create the copies is both time- and labor-intensive, which is another tax on your data teams. Additionally, that code can be difficult to update when policies change, adding significant costs in complexity and data teams’ work hours.

- Copies, which are static data snapshots, will always lag behind the raw data and limit analysis. Appending updates to copies often isn’t possible based on the privacy technique being used. Therefore, data engineers must re-pseudonymize/anonymize the entire source from scratch, costing time and resources.

- Compute workloads cannot be multi-tenant. This forces data teams to create compute clusters for each policy-enforcing copy of data to ensure the users don’t cross-contaminate data within the compute cluster. Consider AWS EMR instances as an example.

In short, copying data and adding security controls potentially removes any and all benefits of migrating to the cloud. Data teams can avoid these pitfalls when planning and executing a cloud migration by taking a more practical and dynamic approach to data policy enforcement.

A Practical Approach to Policy Enforcement

Let’s look at how this could play out for your team. On AWS, the anti-pattern mentioned above looks like:

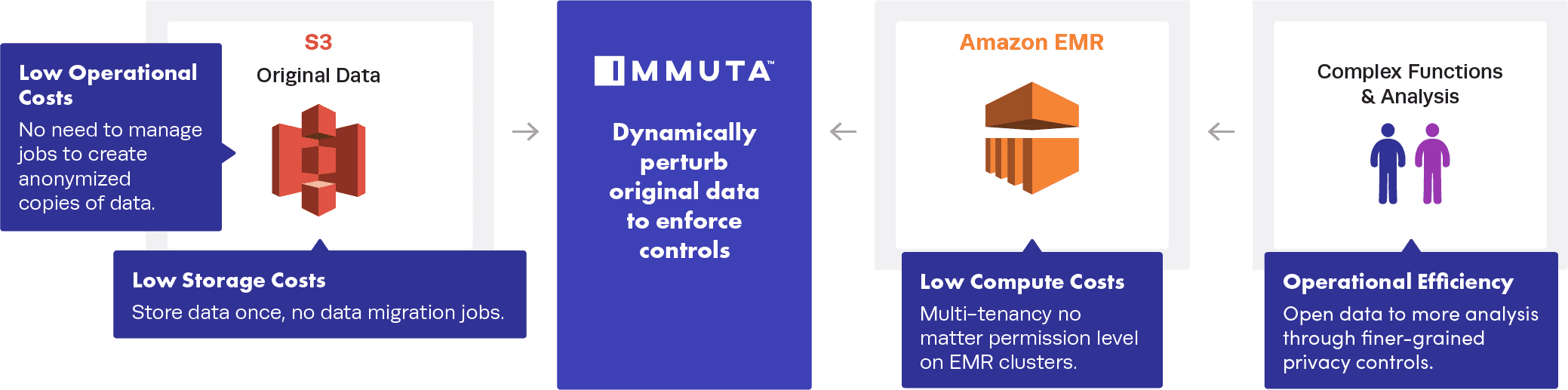

Immuta solves this problem by allowing just-in-time dynamic enforcement of data policies as your analytical jobs are computing. In other words, you only need a single live copy of the raw data. Additionally, Immuta manages the on-demand policies tied to the user and the data, making it possible to support multi-tenancy on the cluster.
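To make the idea of read-time enforcement concrete, here is a minimal sketch in plain Python. It is not Immuta's API or implementation; the `User` class, the `RAW_ROWS` records, the `can_see_identifiers` flag, and the `read_with_policy` function are all hypothetical names invented for illustration. The sketch assumes two simple policies: rows are filtered by the user's operating country, and the identifying column is replaced with a truncated hash for users who are not cleared to see it.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    """Hypothetical data consumer with the attributes the policies key on."""
    name: str
    country: str
    can_see_identifiers: bool


# The single live copy of the raw data (made-up records for illustration).
RAW_ROWS = [
    {"patient": "Alice Smith", "country": "DE", "diagnosis": "A10"},
    {"patient": "Bob Jones", "country": "US", "diagnosis": "B20"},
    {"patient": "Carla Diaz", "country": "US", "diagnosis": "C30"},
]


def mask(value: str) -> str:
    """Replace a value with a short one-way hash (illustrative only)."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()[:12]


def read_with_policy(user: User, rows=RAW_ROWS):
    """Yield rows with policies applied per user at read time.

    The raw rows are never modified and no per-policy copy is written.
    """
    for row in rows:
        # Row-level policy: only rows from the user's operating country.
        if row["country"] != user.country:
            continue
        out = dict(row)  # per-request view, not a stored copy
        # Column-level policy: mask identifiers unless the user is cleared.
        if not user.can_see_identifiers:
            out["patient"] = mask(out["patient"])
        yield out


analyst = User("ana", country="US", can_see_identifiers=False)
clinician = User("carl", country="US", can_see_identifiers=True)

analyst_view = list(read_with_policy(analyst))
clinician_view = list(read_with_policy(clinician))
```

Both users read from the same `RAW_ROWS` in the same process, which is the multi-tenancy point: the policy travels with the request rather than with a copy of the data. A truncated hash is only a stand-in here; whether a given masking technique satisfies a particular regulation is a separate question.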

In practice, the AWS example from above would look like this with Immuta:

The benefits to this method are abundantly clear when operating in the cloud and managing workloads transiently to reduce cost. When migrating sensitive data to the cloud, avoiding copies and all-or-nothing data access controls will save you time and money, as well as securely accelerate speed to data access and analysis.

A Look at the Numbers

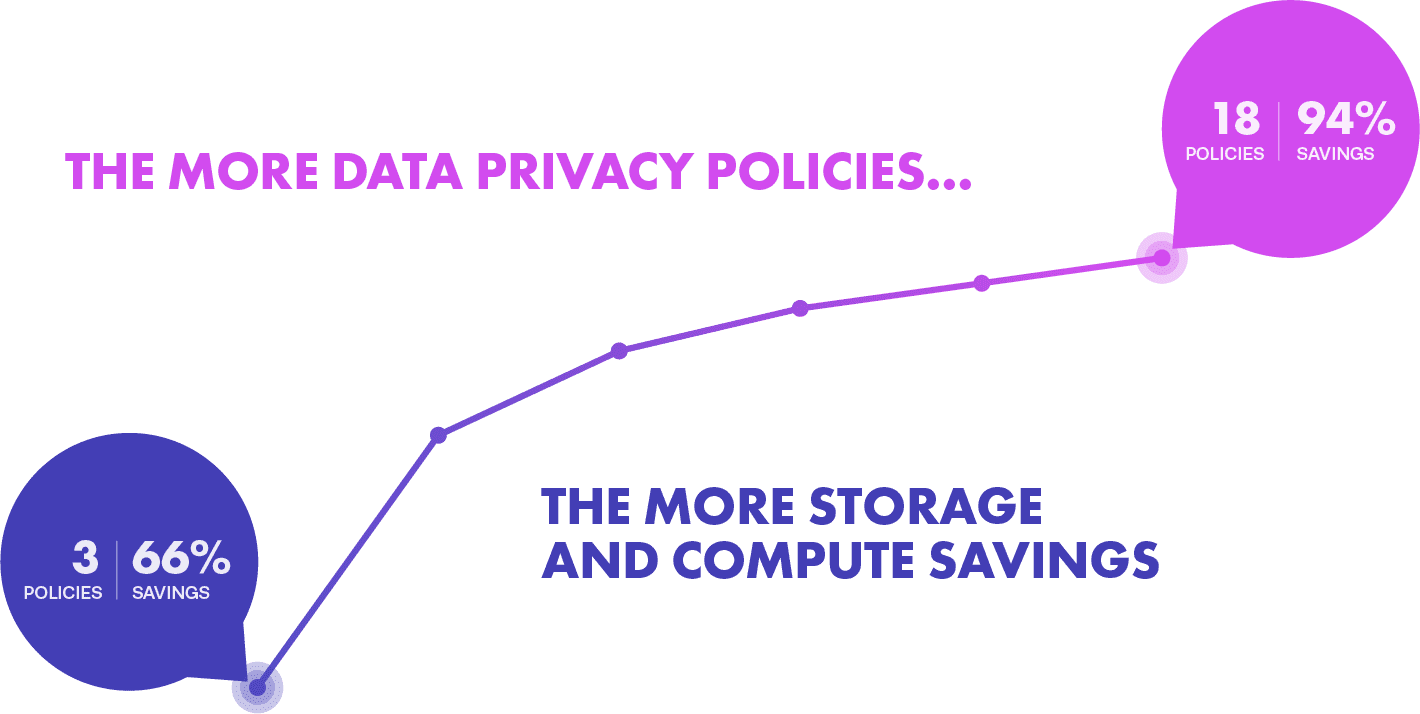

Now that we’ve mapped out the process, let’s see how the savings scale.

We’ll start with our variable: X. X = the number of possible data policy combinations across the intersection of users and policies that need to be enforced. Immuta makes these policy combinations easy to manage and understand with a built-in policy authoring and rules engine. Once you have an estimate of X, you can work backwards to your actual infrastructure savings.

Constants:

- E = EMR instance costs

- S = S3 storage costs

The formula for savings: (E + S) - ((E + S) / X)

Starting with only three policy combinations, you are saving about 67% on compute and storage costs. Those savings grow as you increase the number of policy combinations required.
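As a rough check on how the savings scale, the formula can be evaluated for a few values of X. The sketch below assumes that E and S are the total EMR and S3 costs under the copy-per-policy approach, which is one reading of the formula rather than something the article states outright. The function names and the dollar figures are hypothetical.

```python
def savings(emr_cost: float, s3_cost: float, x: int) -> float:
    """Absolute savings from the article's formula: (E + S) - ((E + S) / X)."""
    if x < 1:
        raise ValueError("x must be at least 1")
    total = emr_cost + s3_cost
    return total - total / x


def savings_fraction(x: int) -> float:
    """Savings as a share of (E + S); the costs cancel out, leaving 1 - 1/X."""
    if x < 1:
        raise ValueError("x must be at least 1")
    return 1 - 1 / x


for x in (3, 5, 10, 20):
    print(f"X = {x:>2}: {savings_fraction(x):.1%} saved")
# X =  3: 66.7% saved
# X =  5: 80.0% saved
# X = 10: 90.0% saved
# X = 20: 95.0% saved

# Made-up monthly costs: $600 for EMR, $300 for S3, three policy combinations.
example = savings(600.0, 300.0, 3)  # 900 - 300 = 600
```

Because the fraction saved depends only on X, the percentage holds whatever your actual EMR and S3 bills are; the dollar amount scales with E + S.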

With Immuta enforcing the data policy controls dynamically, your storage cost remains static and your compute is tied completely to utilization rather than policies, regardless of the number of policy combinations. You’ll need more compute resources if your user count grows, regardless of multi-tenancy; however, transient EMR clusters are a fraction of the cost of storage.

The real magic of this approach is that cost and effort no longer have to be the primary decision factors for how you enforce data access controls. Organizations have historically defaulted to “no, you can’t touch that data,” or “you can only see this highly anonymized version,” because of the issues associated with the anti-pattern above. An automated data access control solution like Immuta integrates with cloud data platforms and enables dynamic, fine-grained access controls that save time and money and reduce redundancy. As a result, you can migrate to the cloud and access and use data faster, more efficiently, and more securely, all without increasing data teams’ workloads.

Learn more about protecting sensitive data when migrating to the cloud in Seven Steps for Migrating Sensitive Data to the Cloud: A Guide for Data Teams. Find out how to efficiently and securely migrate your data science operations to the cloud by starting a free trial of Immuta’s SaaS deployment option.