Permission to share safely.

Power your data marketplace initiatives and data access governance projects with a unified platform that brings all your data assets and people together.

We are excited to announce a new suite of capabilities that make it easier to securely implement a data marketplace, so you can put data to work faster.

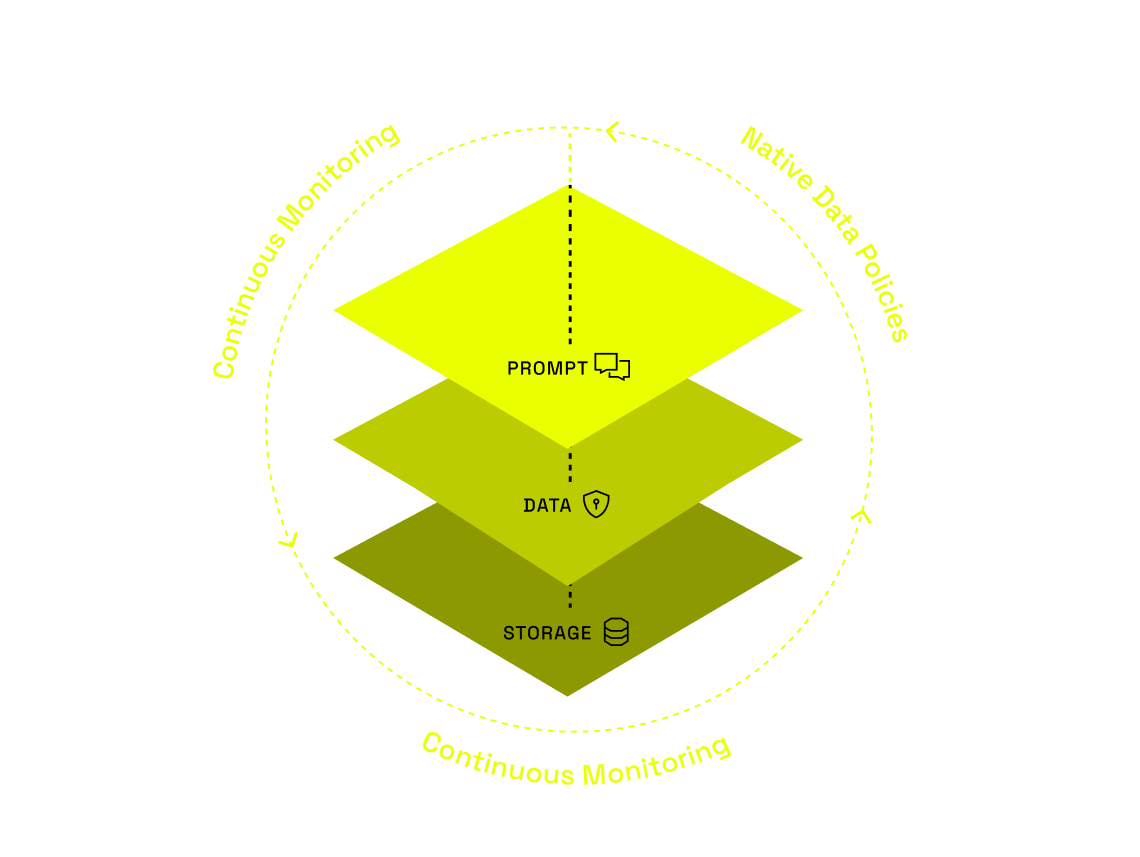

Give everyone fast, governed access to data with the built-in controls, collaboration workflows, automated provisioning, and continuous monitoring you need to keep risk low and compliance high.

Identify sensitive data, enable access, and automatically enforce policy on all of it — no matter where it lives.

Use intuitive workflows to allow data users, stewards, and governors to negotiate and automate data access and policies.

Scale fast access to data with native integrations across all supported compute and storage platforms.

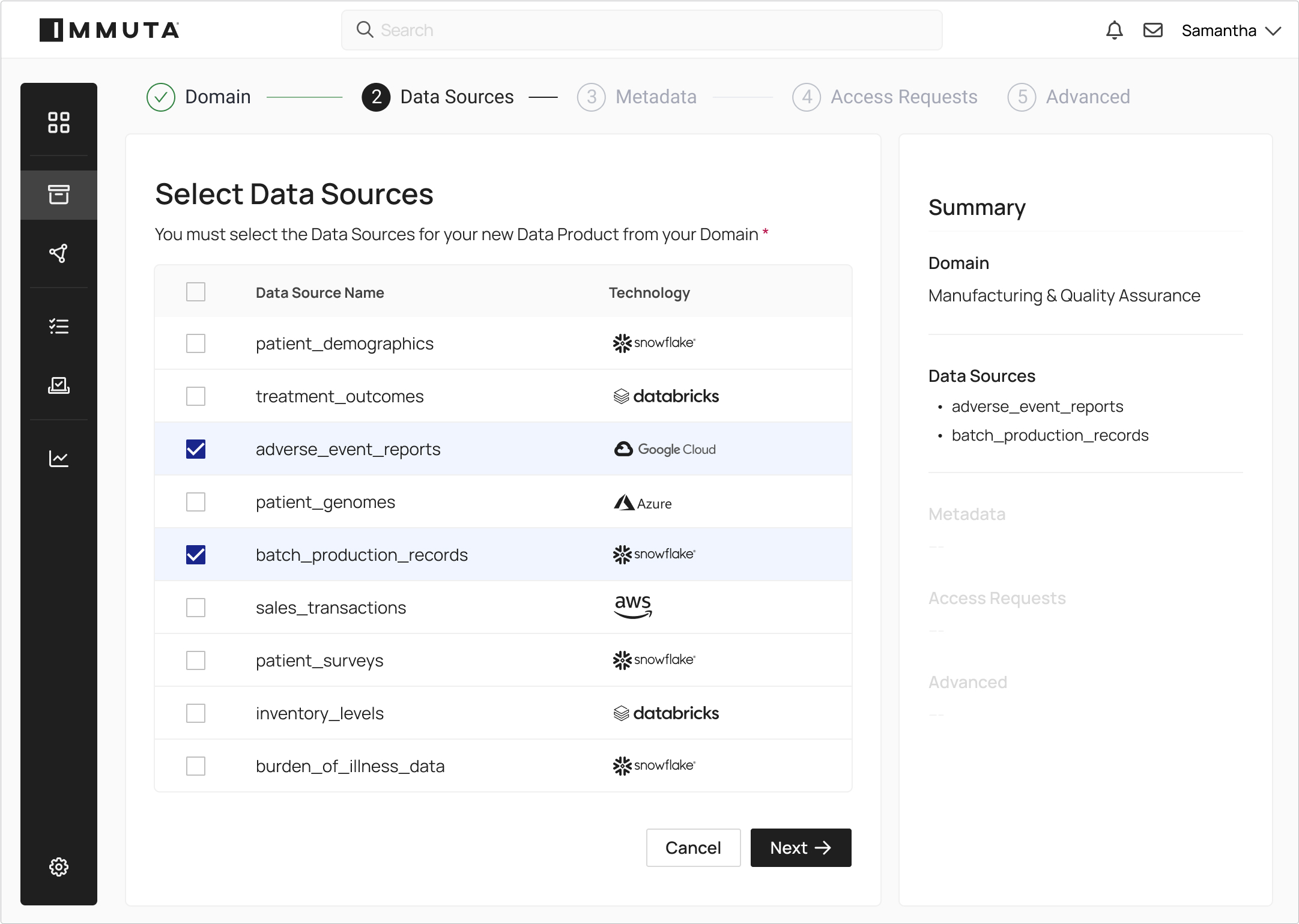

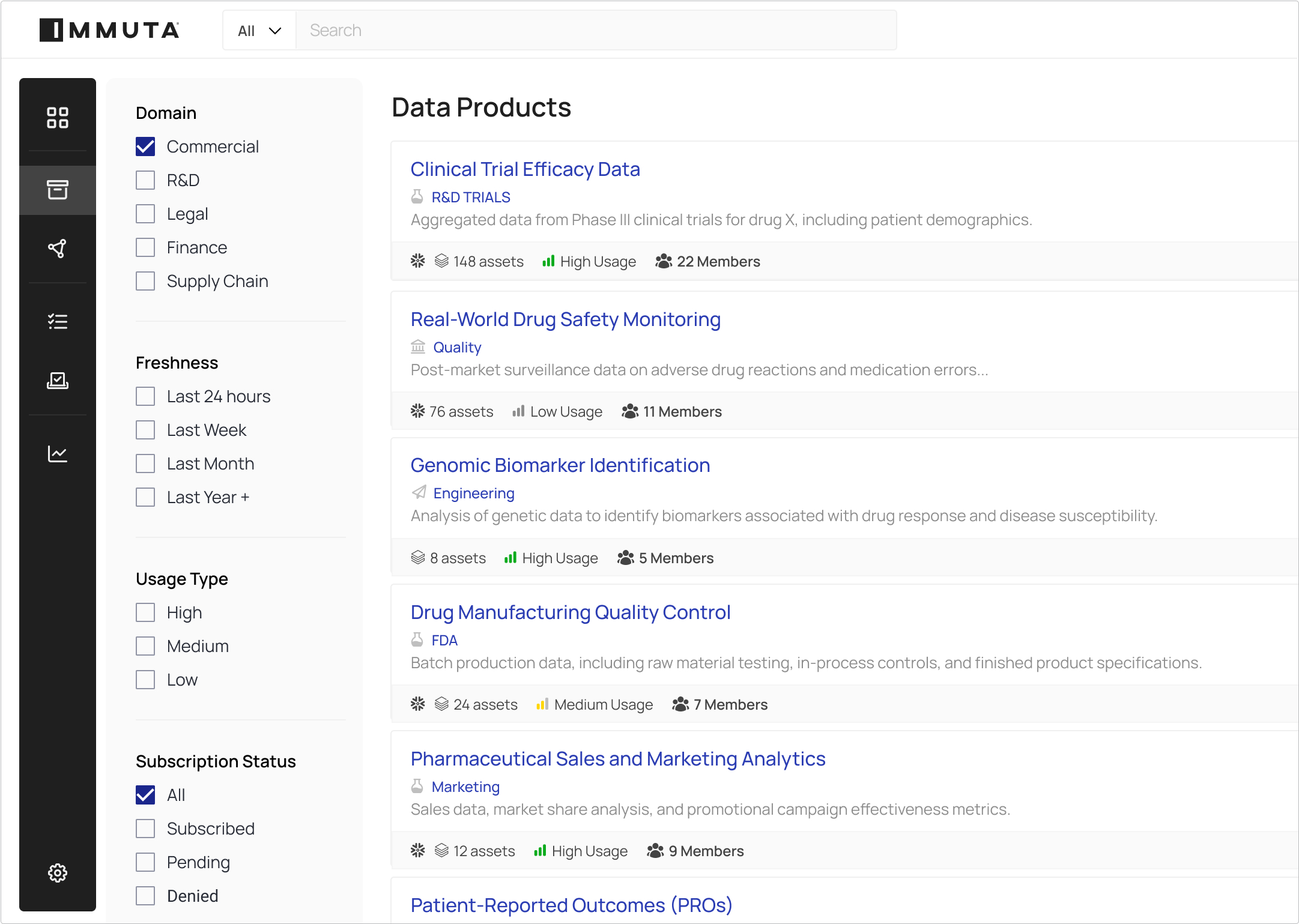

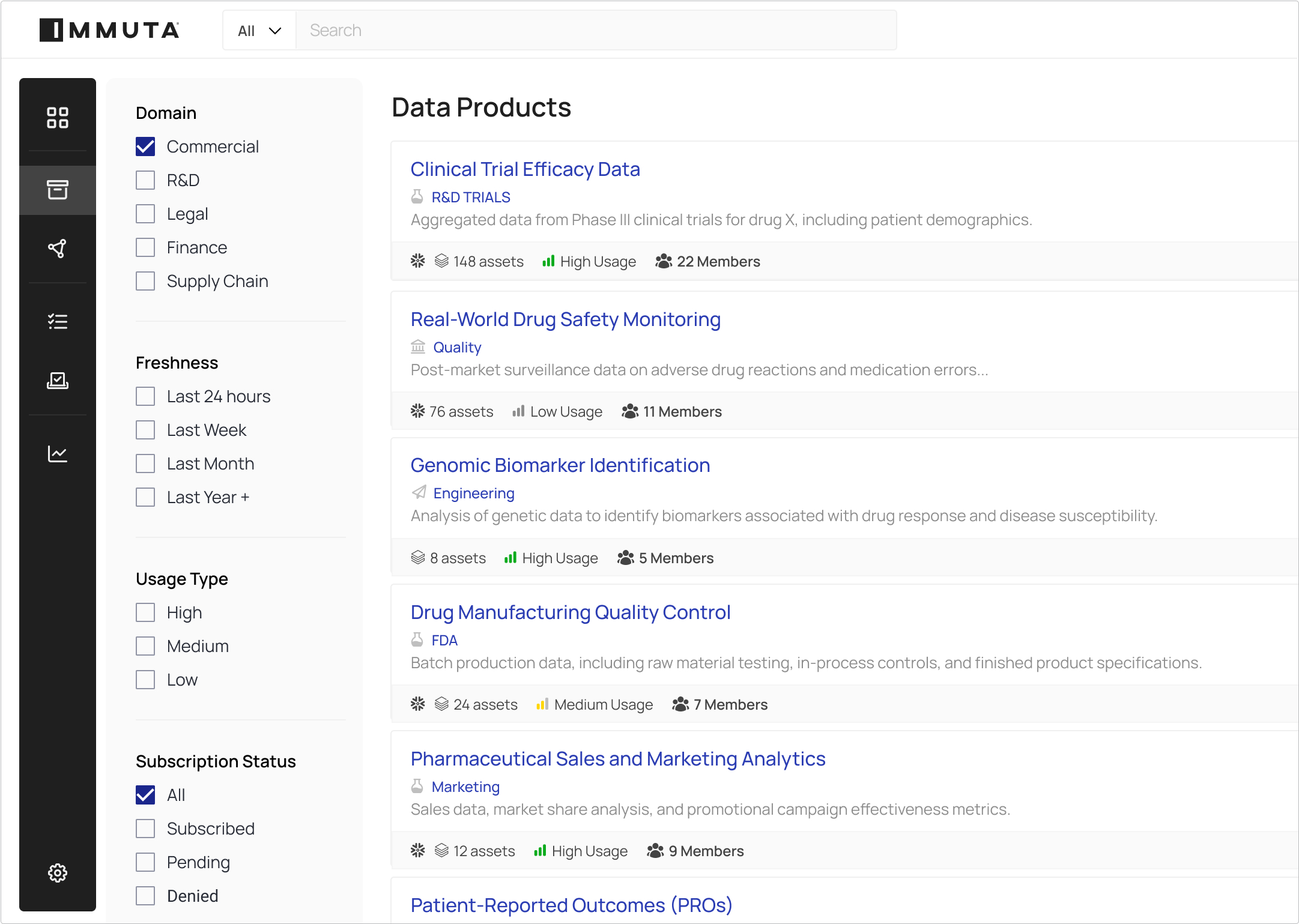

When you build a Data Marketplace with Immuta, you get a centralized hub that makes it easy for data producers to publish data assets, quick for users to find them, and simple for data stewards and governors to authorize access.

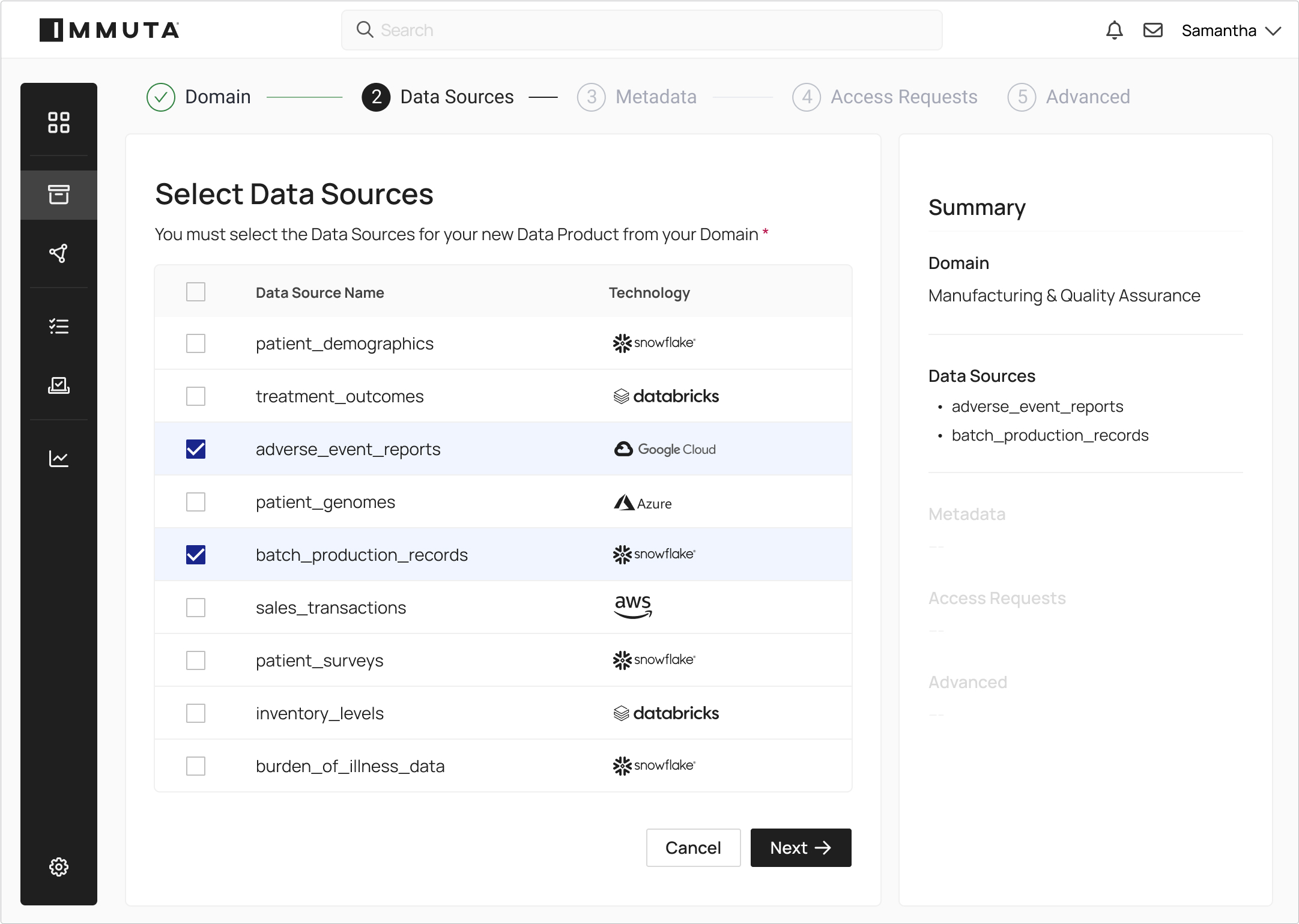

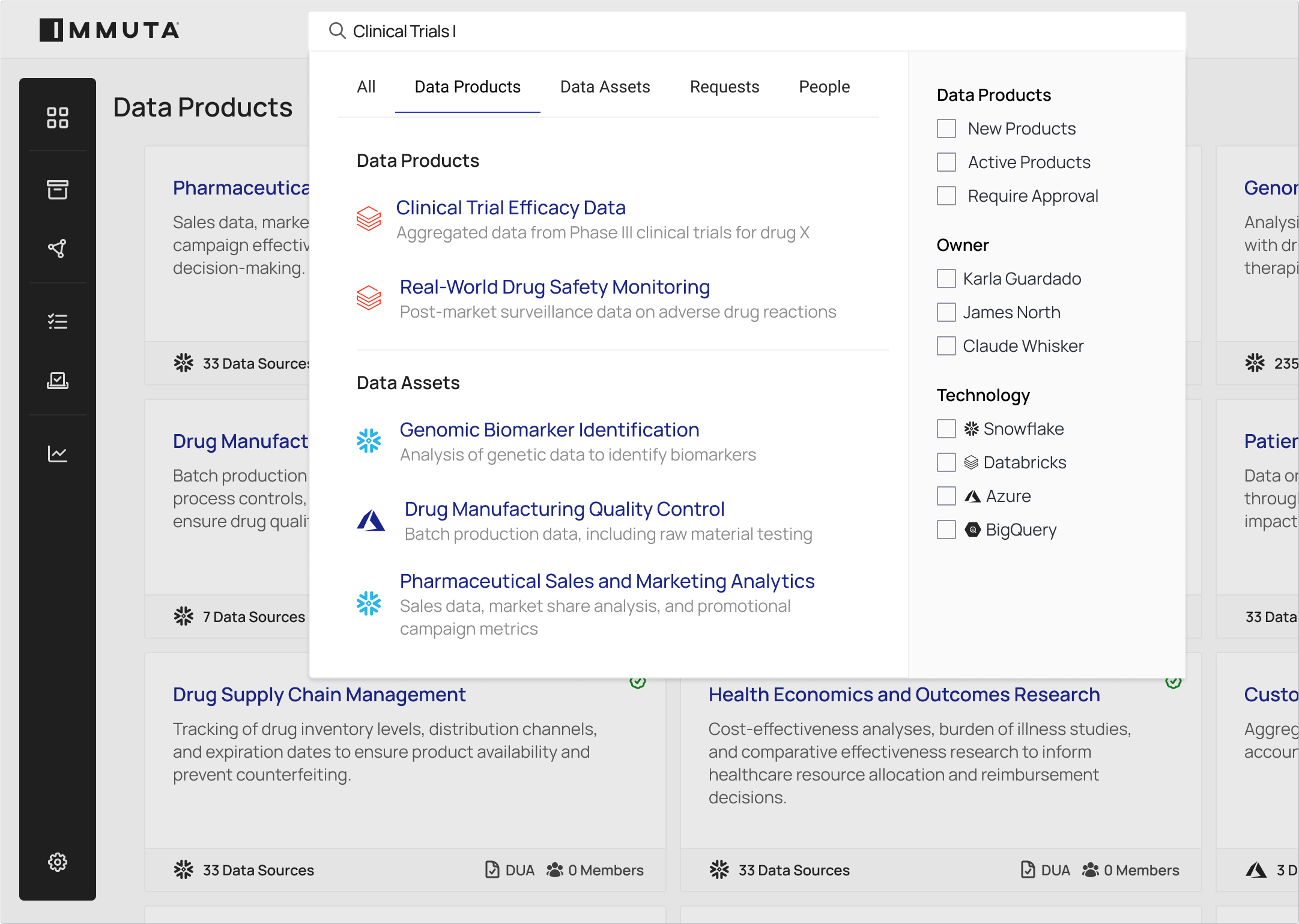

Make curated data products findable on a single, central platform.

Define logical domains for local control and visibility. Enable business users to manage metadata and access approvals separately from data product owners.

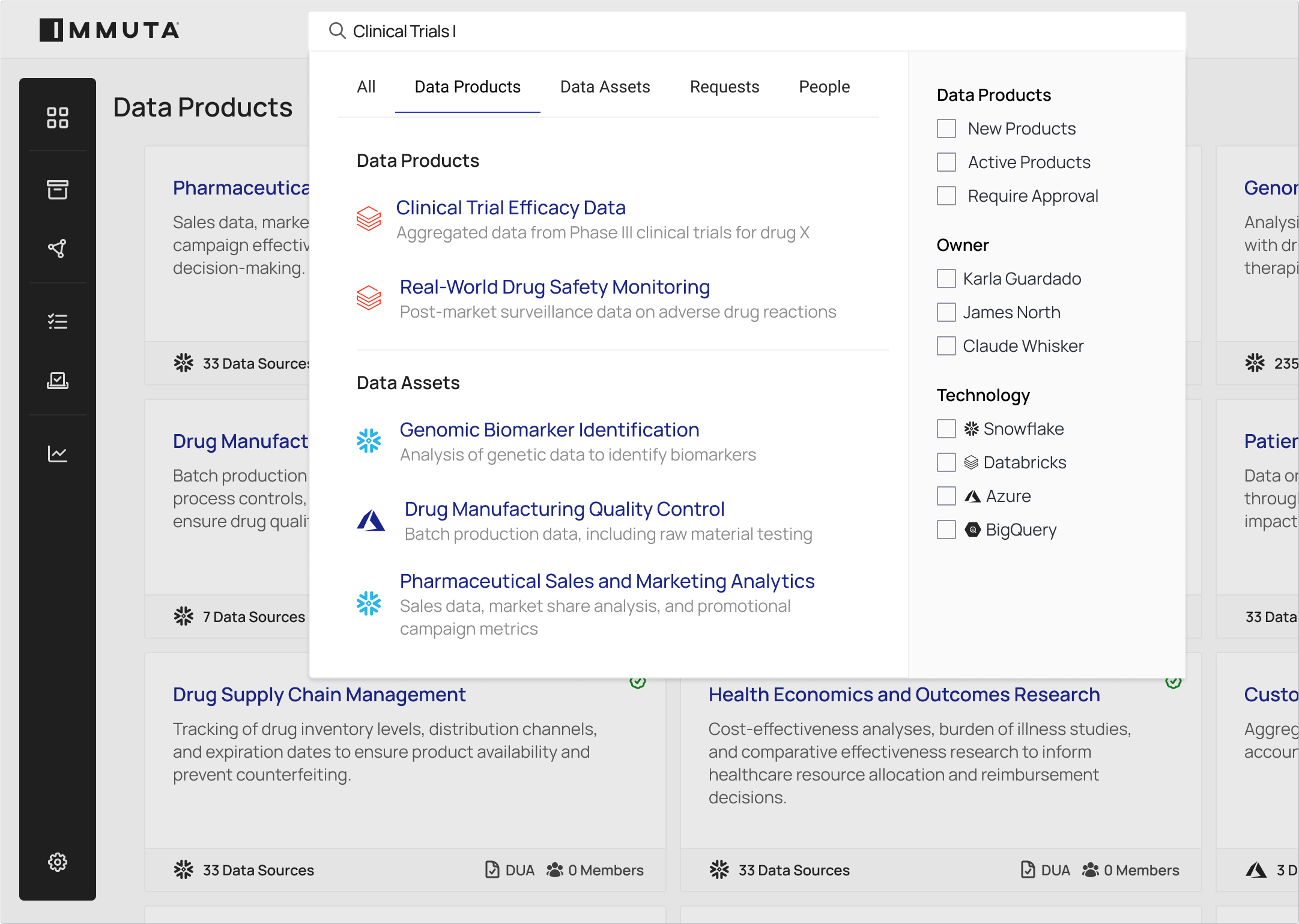

Make it easy for users to search and filter available data assets, and establish a process to request access.

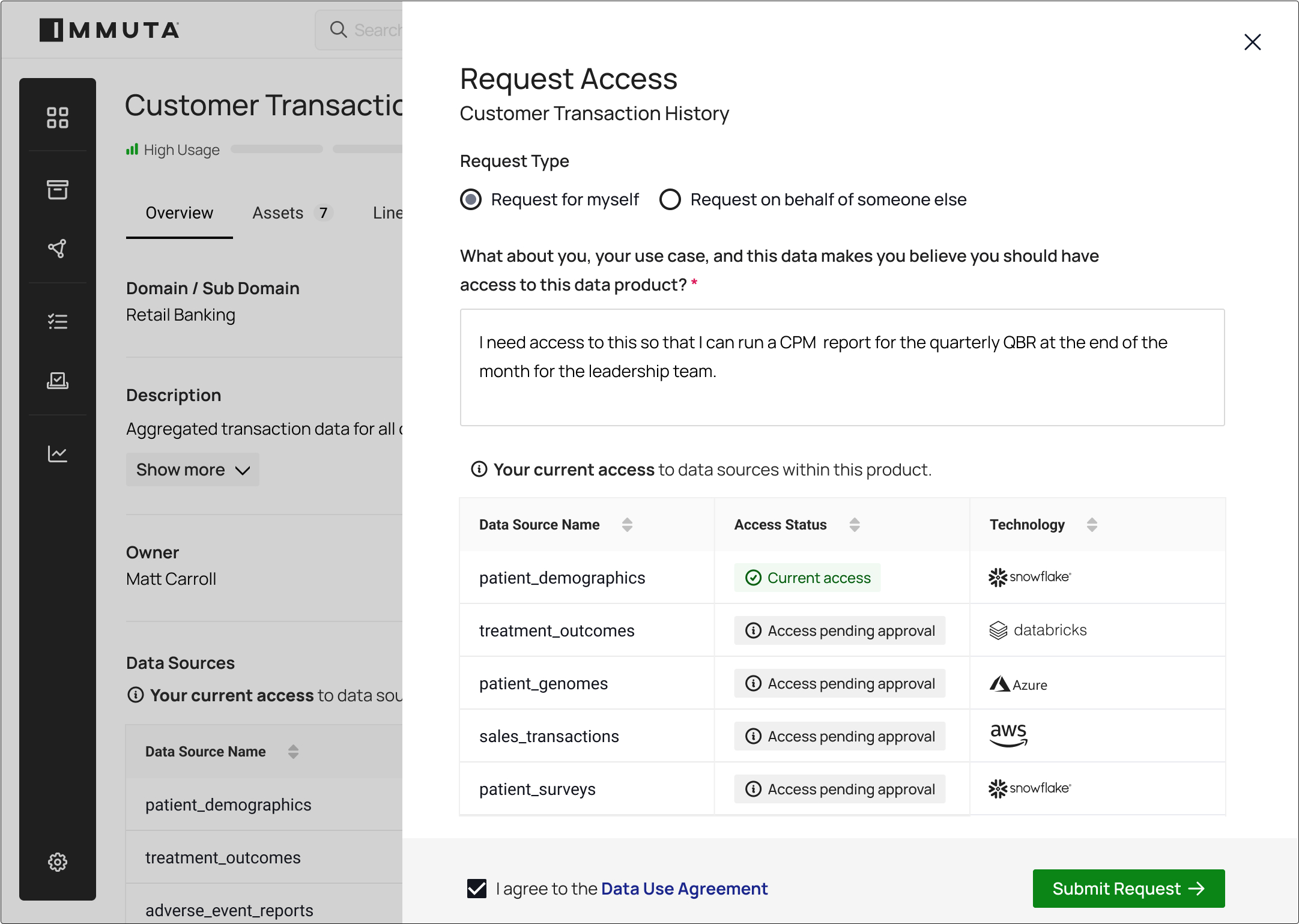

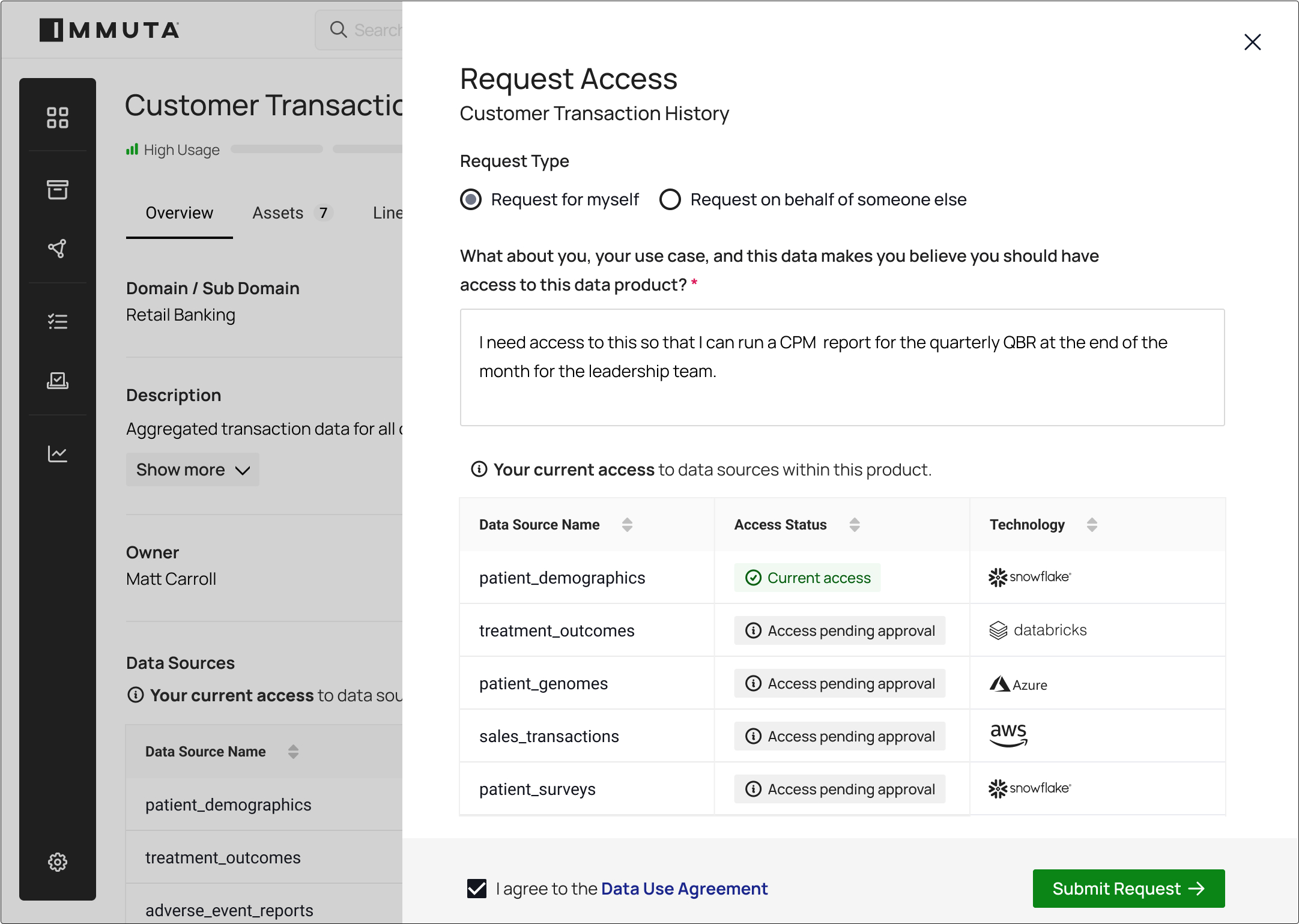

Streamline the approval of data access requests and automatically provision access based on data use agreements. Handle conditional approvals with time-bound access.

Simplify data governance with unified policy-setting across all your platforms — at scale. Automatically connect and classify sensitive data. Easily control access. And stay compliant through real-time monitoring and unified reporting.

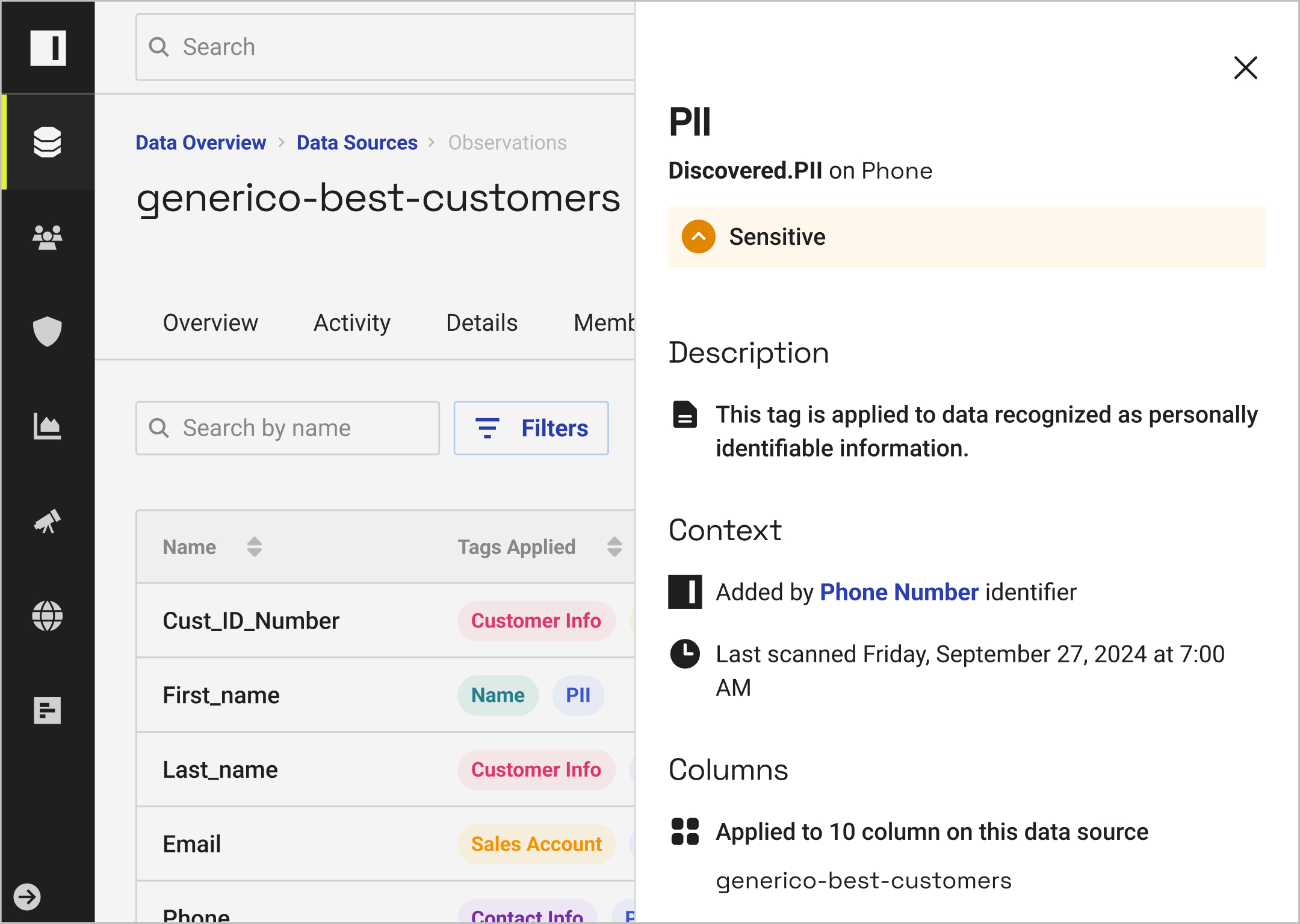

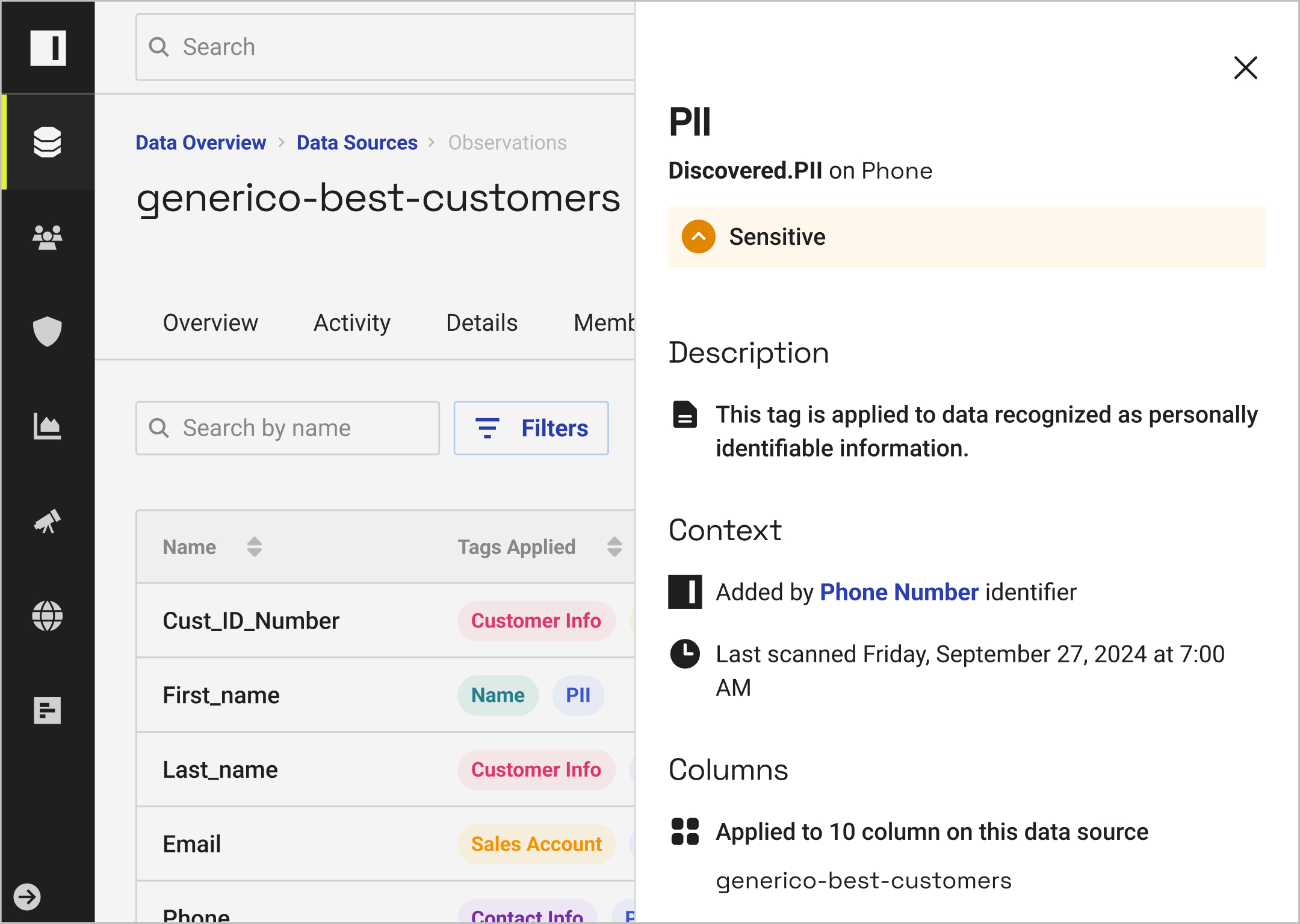

Automatically classify data and data products to drive policy enforcement and search — and manage tag integrations with other catalogs.

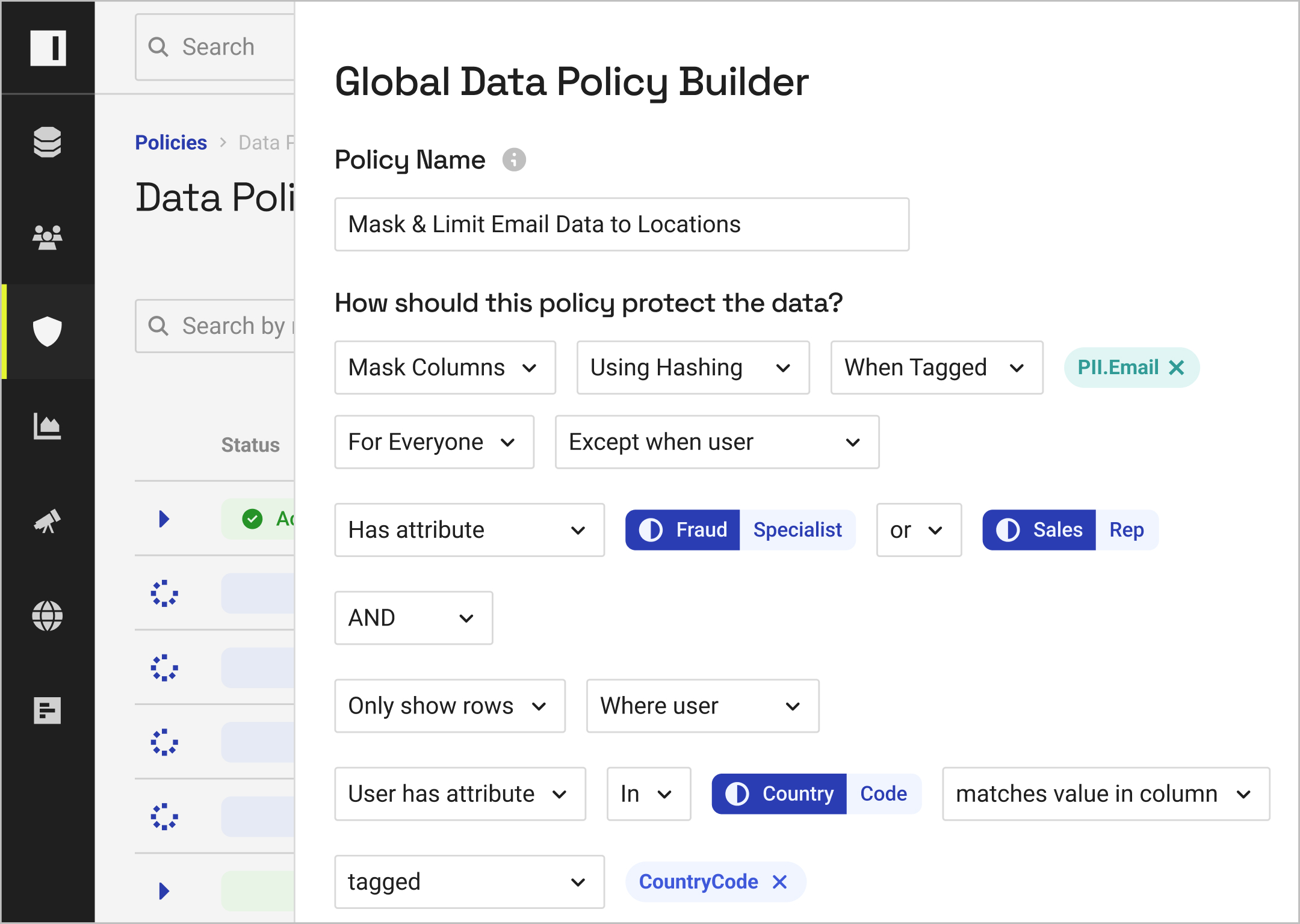

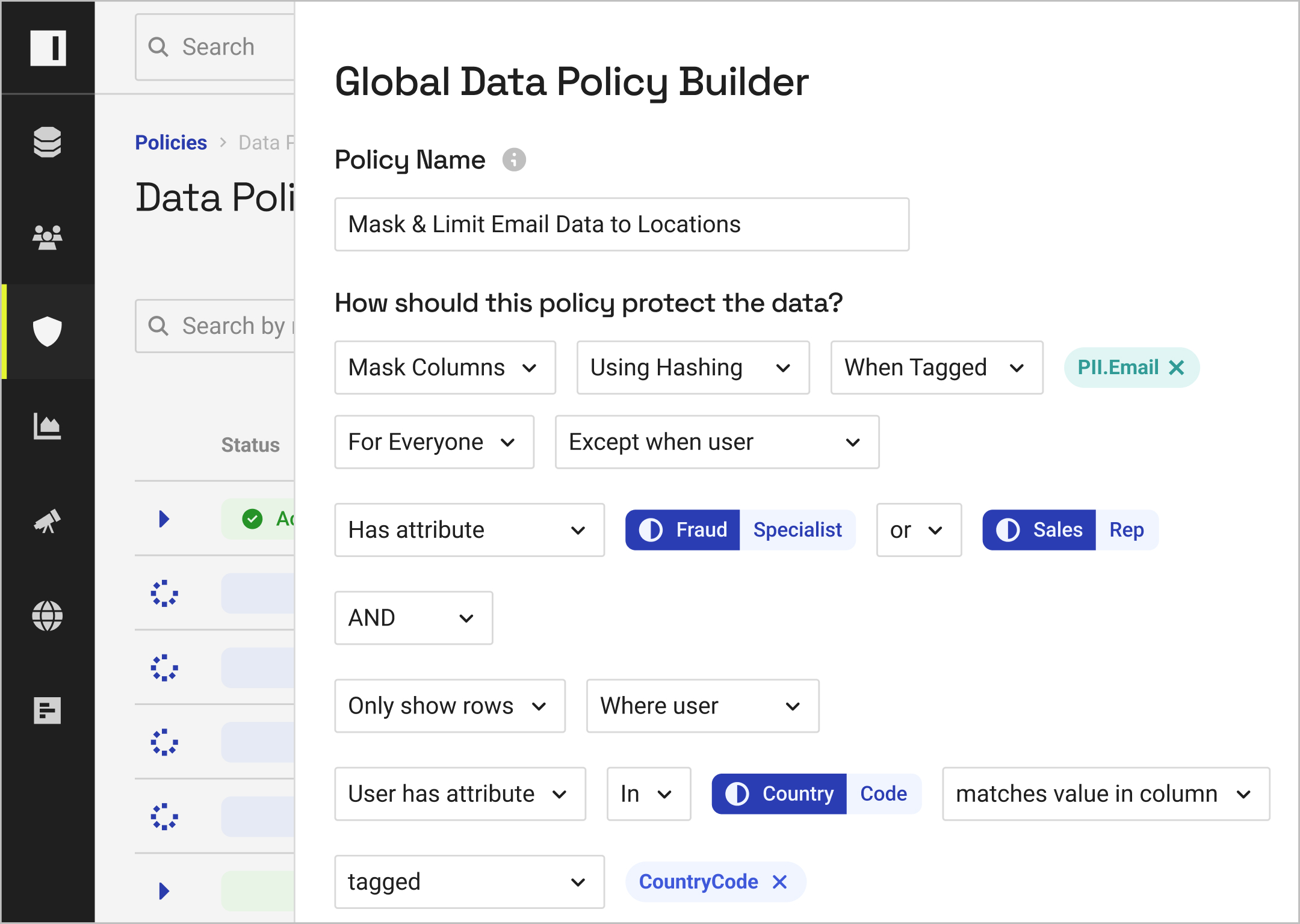

With the ability to test and build cross-platform data access policies in natural language, you can resolve policy bloat and reduce platform-specific efforts.

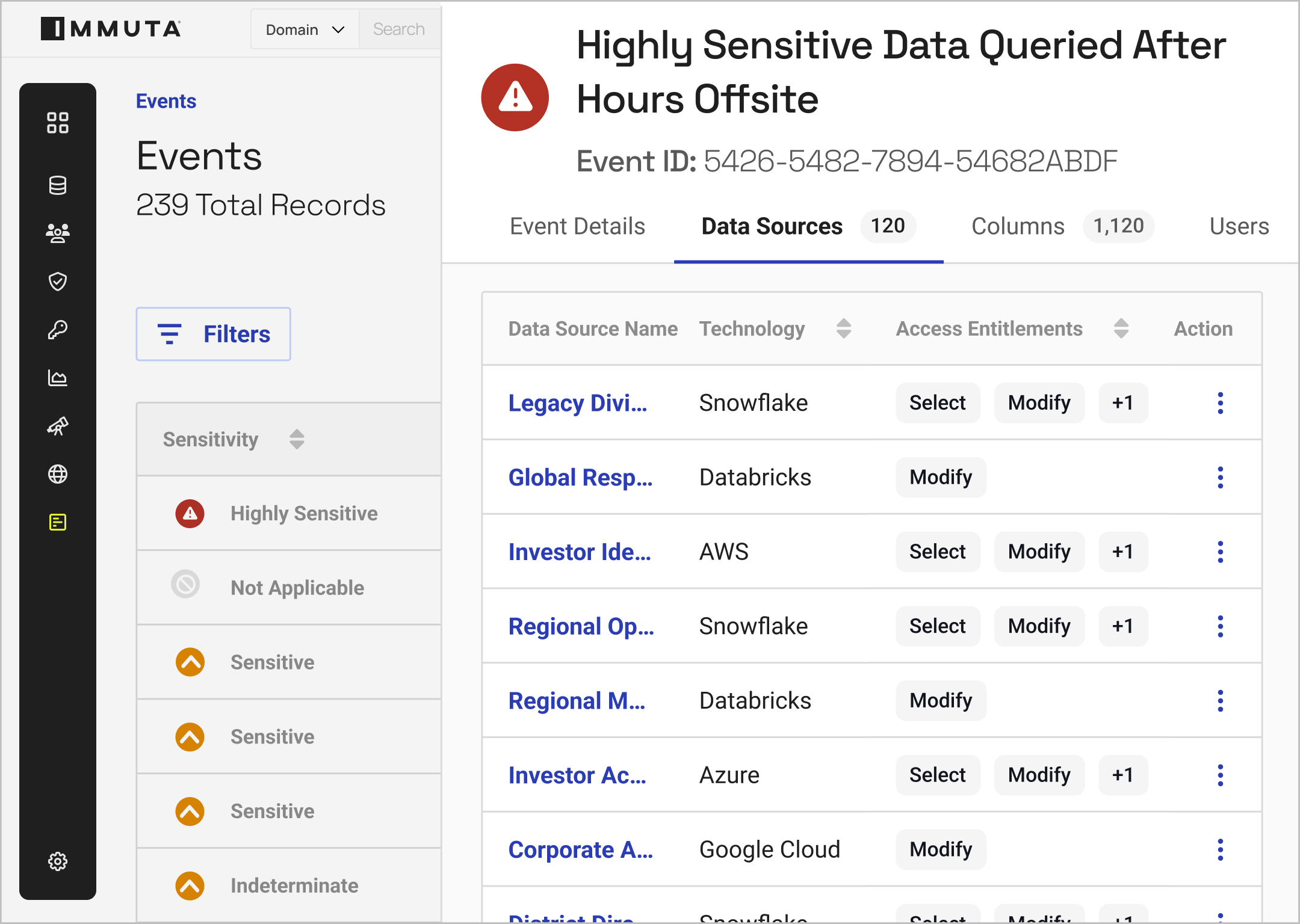

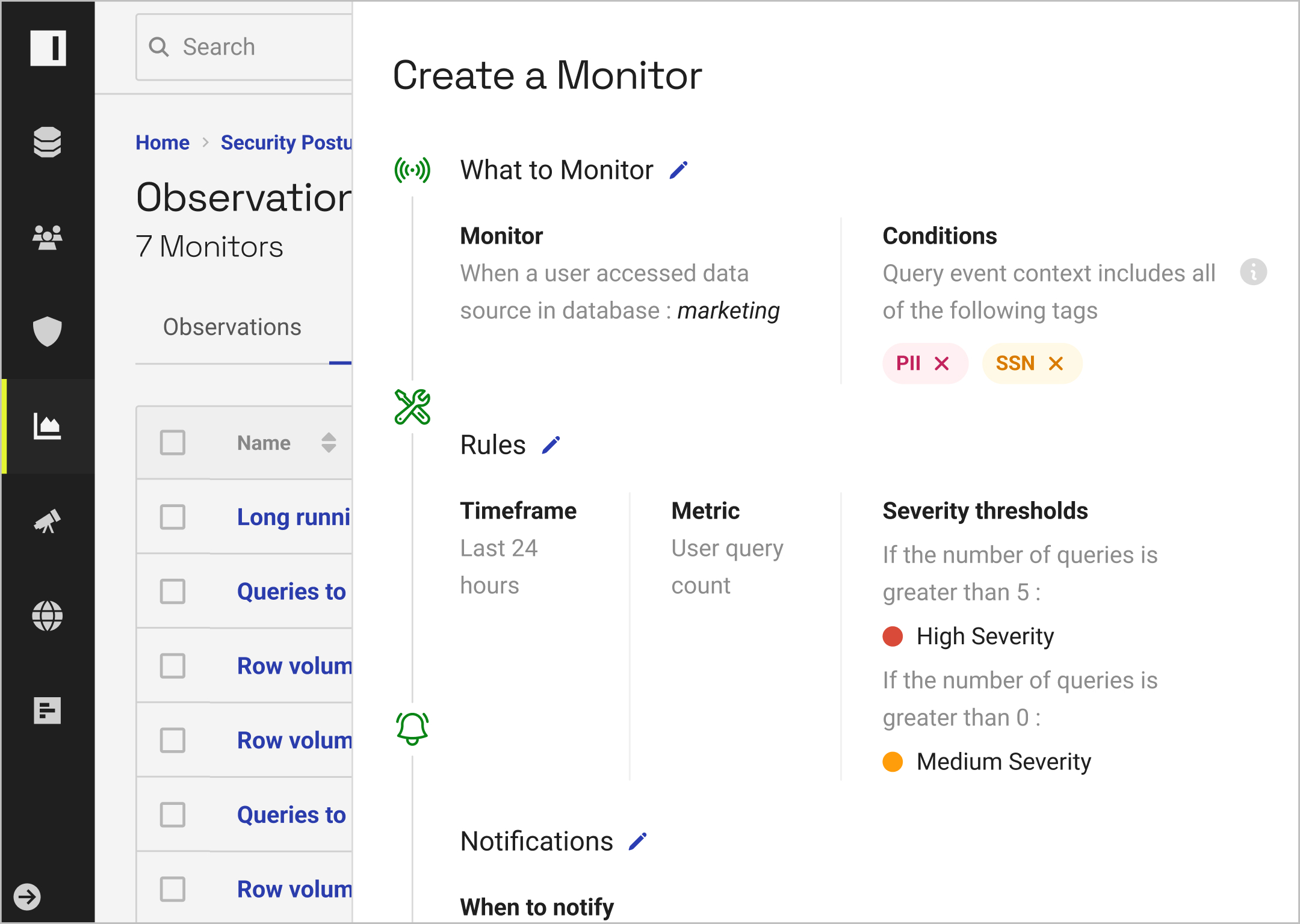

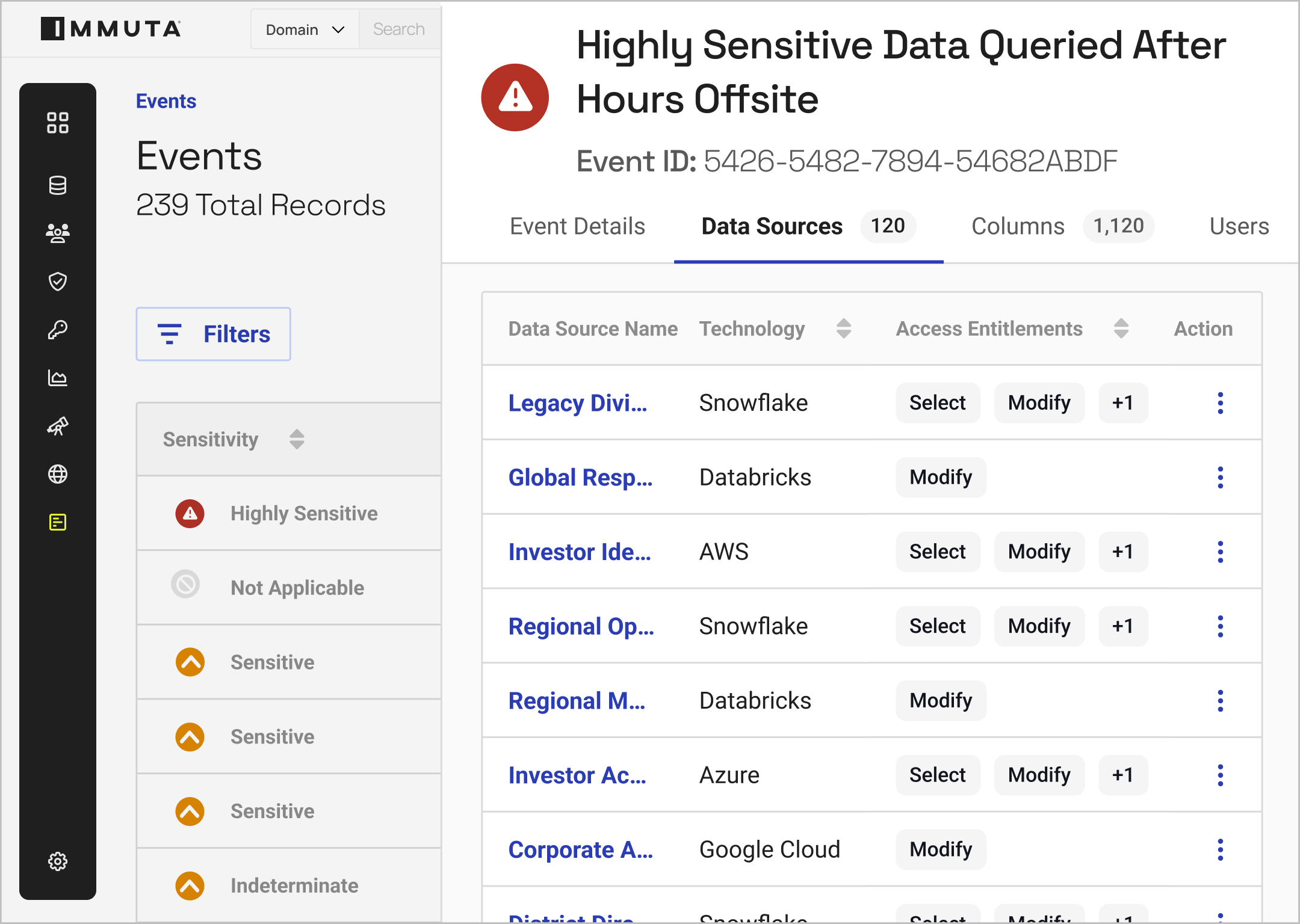

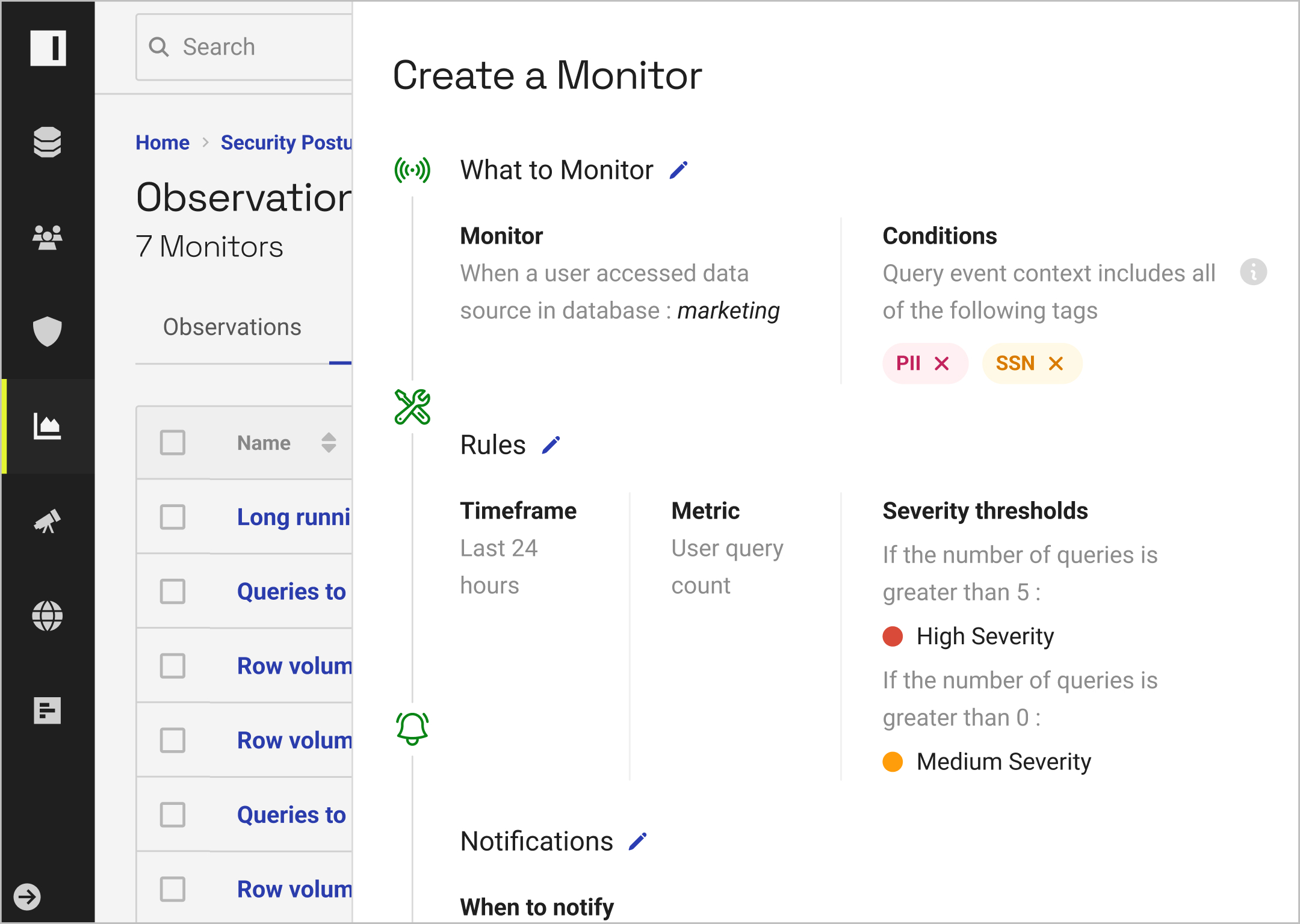

With real-time monitoring and unified reporting, you can understand how data is being accessed at any time.

By building reports based on policy outcomes and user activity, you can streamline compliance.

“With the support of Immuta and our other core partners, we have significantly increased our ability to make timely, data-driven decisions, empowering our employees to best respond to the challenges of the global pharmaceutical sector.”

Pierre Alexandre Fischer, Data Platform Product Line Lead, Roche

saved annually by improving analytics on stock inventory and rotation.

new data products published with more streamlined processes.

Connect data and put it into action immediately — without increasing risk.

We partner with the world’s leading technology and solutions providers to offer best-in-class data access and governance for the modern data stack. And unlike other solutions, our platform operates natively in cloud data platforms — which means you don’t have to sacrifice visibility or performance.

Immuta is part of a modern cloud data ecosystem. Our key partners provide services like identity management, ETL, data intelligence, and analytics. Immuta is the data policy layer, pulling metadata from existing ecosystem solutions to enable granular control and visibility, accelerating the process of giving users access to data assets.

To enable fast, safe data access, Immuta integrates with:

Immuta enforces access controls at the data layer, so you can build and deploy RAG-based GenAI applications quickly, securely, and confidently.