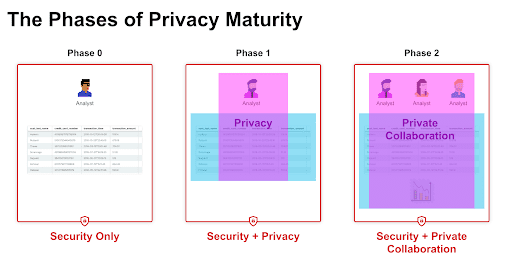

The worlds of security and privacy are converging, and data platform teams find themselves needing to enforce fine-grained access controls to avoid data leaks and manage data clean rooms. This convergence typically occurs in three phases, shown below:

Everyone is doing Phase 0:

Security. You restrict access to Databricks to your own employees through authentication, typically via an identity management provider such as Active Directory or Okta. Your Databricks data plane also runs within your own Azure or AWS account, adding a layer of network security.

Phase 1—Security + Privacy:

This is much more complex. It lies at the intersection of your data's content and the context of who is using the data and for what purpose. You must tag users with attributes and the purposes under which they are acting, then hide or mask data accordingly. Sometimes this is done at the table level, but most often it requires fine-grained access control down to the column, row, or even cell level within a table. This is why you may use Databricks' Table ACL or IAM Passthrough features for table-level access, or a tool like Immuta for fine-grained controls at both the table and sub-table levels.
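To make attribute-driven masking concrete, here is a minimal Python sketch of column-level masking. It assumes a hypothetical `User` type carrying a set of attributes, a hypothetical `pii_access` attribute, and a fixed list of PII columns; it is an illustration of the idea, not Immuta's or Databricks' API. Row- and cell-level controls follow the same pattern, with the policy deciding per row or per value instead of per column.

```python
from dataclasses import dataclass


# Hypothetical user model: a name plus the attributes the user holds.
@dataclass(frozen=True)
class User:
    name: str
    attributes: frozenset = frozenset()


# Hypothetical policy: these columns are PII and are masked unless the
# user holds REQUIRED_ATTRIBUTE.
PII_COLUMNS = {"email", "ssn"}
REQUIRED_ATTRIBUTE = "pii_access"
MASK = "****"


def apply_policy(user, rows):
    """Return a masked copy of `rows` as this user would see it."""
    can_see_pii = REQUIRED_ATTRIBUTE in user.attributes
    return [
        {col: (val if can_see_pii or col not in PII_COLUMNS else MASK)
         for col, val in row.items()}
        for row in rows
    ]


customers = [
    {"id": 1, "email": "a@example.com", "ssn": "123-45-6789", "signup": "2021-03-04"},
]
privileged = User("privileged_analyst", frozenset({"pii_access"}))
restricted = User("restricted_analyst")

print(apply_policy(privileged, customers))  # PII in the clear
print(apply_policy(restricted, customers))  # email and ssn masked
```

Two users reading the same table and getting different results is exactly the heterogeneous access the rest of this post builds on.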

Phase 2—Security + Private Collaboration:

This is the forgotten (and hardest) phase. It's the phase that comes and punches you in the face just as you're patting yourself on the back for completing Phase 1. Here's the problem: once you've applied finer-grained privacy controls, you must also treat anything analysts WRITE back to the Databricks cluster as sensitive. Here's a quick example:

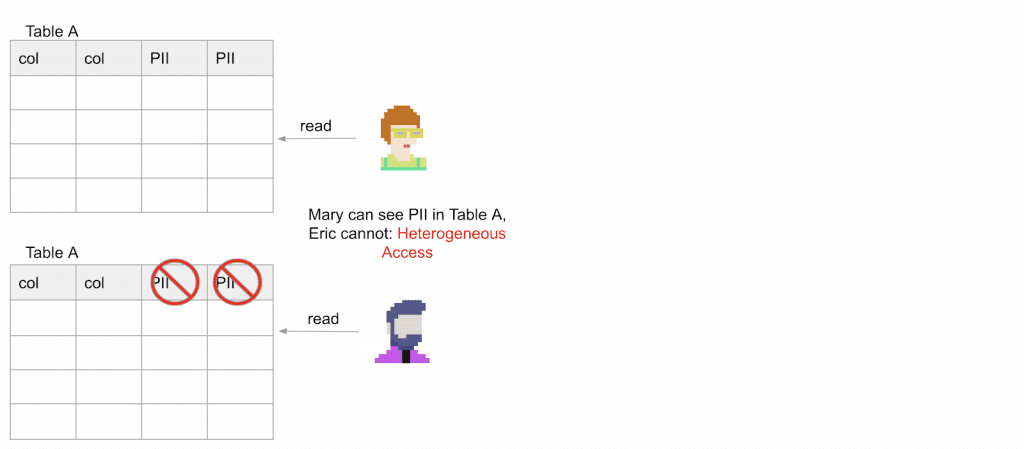

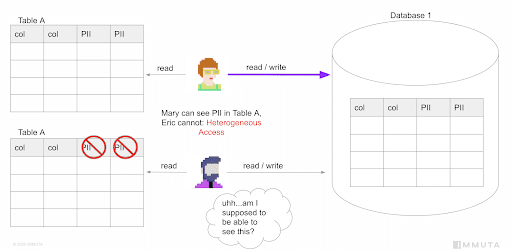

Take Table A, for example. Both Mary and Eric have access to it, but PII is masked from Eric, whereas Mary has heightened access and is allowed to see the PII in that same Table A. This is an example of a Phase 1 control and is what we term Heterogeneous Access. It is the foundation of Phase 1…but also the root of your headaches in Phase 2. Here's why:

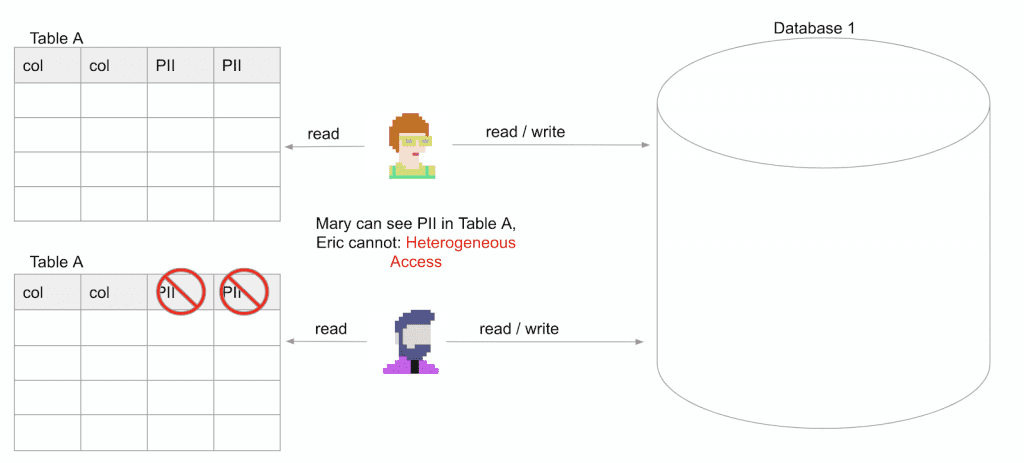

We now open a database, Database 1, to which both Mary and Eric have read/write access.

Now Eric runs a transform against Table A to normalize one of the date columns and writes the result to Database 1. Mary can read that output, and she'll wonder why she can no longer see PII. This is a problem, but at least it's not a data leak. We term this sharing up: Eric can see less than Mary, so he is sharing "up" to her. At this point, Database 1 can be considered a Databricks data clean room.

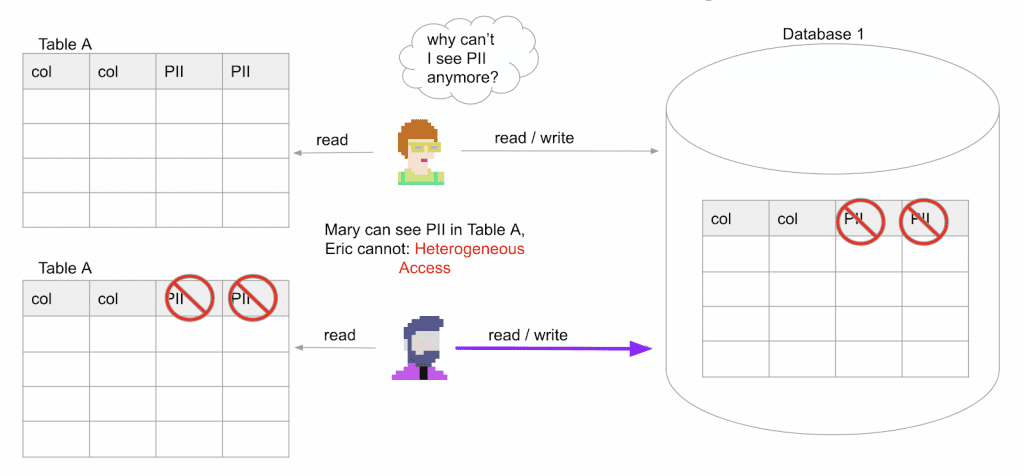

The next scenario is much worse: sharing down. Here, Mary runs a transform and writes the result to Database 1. When Eric reads her output, he sees the PII in the clear, which is a very serious data leak.
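The difference between sharing up and sharing down can be expressed as a comparison of what each analyst sees in the clear. The sketch below is a simplification under stated assumptions: `CLEAR_COLUMNS` is a hypothetical map of the Table A columns each analyst can see unmasked, and a newly written table is assumed to carry no policy, so readers see exactly what the writer saw.

```python
# Hypothetical: Table A columns each analyst sees in the clear.
CLEAR_COLUMNS = {
    "Mary": {"id", "email", "ssn", "signup"},  # heightened access: PII visible
    "Eric": {"id", "signup"},                  # PII masked
}


def classify_write(writer, reader):
    """Classify what happens when `reader` reads a table `writer` wrote.

    Assumes the written table carries no policy, so the reader sees
    whatever the writer could see in the clear.
    """
    writer_sees = CLEAR_COLUMNS[writer]
    reader_sees = CLEAR_COLUMNS[reader]
    leaked = writer_sees - reader_sees  # clear for the writer, masked for the reader
    lost = reader_sees - writer_sees    # the reader normally sees these, the writer did not
    if leaked:
        # Any leak dominates: this is sharing down.
        return "share down (leak)", leaked
    if lost:
        return "share up (no leak)", lost
    return "equal", set()


print(classify_write("Eric", "Mary"))  # Eric writes, Mary reads
print(classify_write("Mary", "Eric"))  # Mary writes, Eric reads
```

Sharing up only costs the reader visibility; sharing down hands the reader columns their own policy would have masked.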

When you're working in a heterogeneous environment (like Phase 1) and you intend to let analysts write data back to the cluster's storage (ADLS, S3, DBFS), you will have this problem even if you are only doing table-level controls. Before GRANTing an analyst READ/WRITE to a database, you must compare that analyst's entire READ universe with the READ universe of every other analyst who has READ/WRITE to that database. And this must be future-proof: if you later GRANT one of those analysts READ on some other table, you must re-check every database where they have WRITE.
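The bookkeeping described above can be sketched as two checks. This is a hypothetical model, not an Immuta or Databricks feature: a "READ universe" is represented as a set of `(table, column)` pairs the analyst sees in the clear, and a database is represented by its sets of writers and readers. A grant is unsafe if any writer can read something some reader cannot.

```python
from itertools import product


def share_down_violations(read_universe, writers, readers):
    """Return (writer, reader, leaked) for every pair where the writer can
    read something the reader cannot, i.e. where data could be shared down."""
    violations = []
    for writer, reader in product(writers, readers):
        leaked = read_universe[writer] - read_universe[reader]
        if leaked:
            violations.append((writer, reader, leaked))
    return violations


def safe_to_grant_read_write(read_universe, writers, readers, new_user):
    """Check a proposed READ/WRITE grant on a database before issuing it."""
    return not share_down_violations(
        read_universe, set(writers) | {new_user}, set(readers) | {new_user}
    )


def safe_to_grant_table_read(read_universe, databases, user, new_items):
    """Before widening `user`'s READ, re-check every database where they WRITE.

    `databases` maps a database name to a (writers, readers) pair.
    Widening READ only adds leaks where `user` is a writer, so only
    those databases need re-checking.
    """
    widened = dict(read_universe)
    widened[user] = read_universe[user] | new_items
    return all(
        not share_down_violations(widened, writers, readers)
        for writers, readers in databases.values()
        if user in writers
    )


read_universe = {
    "Mary": {("table_a", "id"), ("table_a", "ssn")},
    "Eric": {("table_a", "id")},
    "Dana": {("table_a", "id")},
}
print(safe_to_grant_read_write(read_universe, {"Eric"}, {"Eric"}, "Mary"))  # False
print(safe_to_grant_read_write(read_universe, {"Eric"}, {"Eric"}, "Dana"))  # True
```

The second function is the "future-proof" part: every new table grant has to be re-evaluated against every database the analyst can write to, which is why this quickly becomes unmanageable by hand.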

This is the conundrum of Phase 2. I'll repeat: you have this problem if you are doing any level of Phase 1 (even just table-level controls) and you intend to let your analysts write data. The more complex your access controls, the harder the problem becomes. Do you feel like someone punched you in the mouth yet?

How Immuta Helps Manage Databricks Data Clean Rooms

Immuta not only provides a solution for Phase 1 but, equally important, solves the "share down" problem of Phase 2, so data teams can collaborate securely in Databricks data clean rooms. We do this through the Immuta Data Security Platform's Projects, where data users can share and collaborate on equalized data sets. Projects are self-service, logical groupings of members (the analysts) and tables.

Let’s consider a scenario.

Eric decides today he needs to work with Mary. With Immuta, he can simply create a new Project, add Table A to it, and add Mary as a member. When Eric creates that Immuta Project with Mary, two critical things occur:

- A new database is created in Databricks for the Project Eric created. Read/write access to that database is limited to Project members, in this case Eric and Mary.

- The Project is "equalized." Equalization takes the intersection of all Project members' access, as evaluated against the table policies, and homogenizes it, effectively creating a Databricks data clean room. In our example, this means Mary loses access to the PII columns in Table A because she has been equalized to Eric's level in the Project. Note that equalization is updated if new members join the Project or if tables with different policies are added.
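At its simplest, equalization is a set intersection. The sketch below assumes each member's access can be reduced to a hypothetical map of table to columns visible in the clear; Immuta evaluates attributes and purposes against policies, which this deliberately flattens. `equalize` is an illustrative name, not an Immuta function.

```python
from functools import reduce

# Hypothetical: per member, per table, the columns visible in the clear.
clear_columns = {
    "Mary": {"table_a": {"id", "email", "ssn", "signup"}},
    "Eric": {"table_a": {"id", "signup"}},
}


def equalize(clear_columns, members, project_tables):
    """For each Project table, keep only the columns every member sees in the clear.

    `members` must be non-empty. Re-run this whenever members join or
    tables with different policies are added.
    """
    return {
        table: reduce(set.intersection,
                      (set(clear_columns[m][table]) for m in members))
        for table in project_tables
    }


print(equalize(clear_columns, ["Eric", "Mary"], ["table_a"]))
```

Because the result is the intersection, adding a more restricted member can only shrink what the Project exposes, which is why Mary loses PII once Eric joins.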

But what if Mary still needs to see PII when not working with Eric in the Project?

This is solved through "Project switching." Mary (or any Project member) can tell Immuta which Project they are currently working in. Project switching has the following effects:

- It lets Mary see her current Project database (the one she can write to) in Databricks. In other words, when she lists databases, she will not see a Project database she has write access to until she is acting in that Project (by switching to it).

- It also restricts access to only the tables in the Project. If Mary lists tables, she sees only Table A, because it is the only table in the Project. This guarantees that data not considered during equalization can never be written to the Project database.

- It equalizes Mary down to Eric’s level.

If Mary switches out of the Project to "no Project," she will see PII in Table A again, as well as tables outside the Project, but she will no longer see the Project database she could write to in Databricks. In other words, she can read all she wants, but she has nowhere to write until she is in a Project. Remember, creating Projects is self-service.
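The switching rules above behave like a small state machine keyed on the current Project. The following is a minimal sketch under stated assumptions: `Project`, `Session`, and the database name `eric_mary_db` are hypothetical, and access is again reduced to columns visible in the clear. It shows the three effects together: only Project tables are visible, the Project database is the only writable target, and reads are equalized; with no Project, reads widen and writes disappear.

```python
from dataclasses import dataclass
from functools import reduce
from typing import Optional


@dataclass
class Project:
    name: str
    database: str   # hypothetical name of the Project's writable database
    members: set
    tables: set


@dataclass
class Session:
    user: str
    clear_columns: dict     # user -> table -> columns visible in the clear
    readable_tables: set    # tables the user can read outside any Project
    current_project: Optional[Project] = None

    def switch_to(self, project):
        """Switch Projects; passing None models switching to "no Project"."""
        if project is not None and self.user not in project.members:
            raise PermissionError(f"{self.user} is not a member of {project.name}")
        self.current_project = project

    def visible_tables(self):
        if self.current_project is None:
            return set(self.readable_tables)
        return set(self.current_project.tables)  # only Project tables

    def writable_databases(self):
        if self.current_project is None:
            return set()                         # nowhere to write
        return {self.current_project.database}

    def columns_in_clear(self, table):
        if table not in self.visible_tables():
            raise PermissionError(f"{table} is not visible in the current context")
        if self.current_project is None:
            return set(self.clear_columns[self.user][table])
        # Inside a Project, reads are equalized across all members.
        return reduce(set.intersection,
                      (set(self.clear_columns[m][table])
                       for m in self.current_project.members))


clear = {
    "Mary": {"table_a": {"id", "email", "ssn", "signup"}, "table_b": {"x"}},
    "Eric": {"table_a": {"id", "signup"}},
}
project = Project("eric_mary", "eric_mary_db", {"Eric", "Mary"}, {"table_a"})
mary = Session("Mary", clear, {"table_a", "table_b"})
mary.switch_to(project)
print(mary.visible_tables(), mary.writable_databases())
```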

This completely solves the Phase 2 problem without any administrator intervention and allows full multi-tenancy on your Databricks clusters. The same Project workflow is also available for EMR, Cloudera, and Snowflake data access.

But wait! What if Mary did some great feature engineering in the Project and now wants to share it with analysts outside the Project, or see that output herself when working outside the Project? Immuta can also create what are called derived data sources.

To create a derived data source, Mary registers the table she created within the Immuta Project (a separate permission that allows analysts to register derived tables), and that table inherits the appropriate policy. For example, we know for a fact that PII was hidden from anything Mary created in the Project, so we can open that derived data source to anyone. Had the equalization level allowed PII to be seen (for example, if Eric weren't in the Project), the policy would have restricted the derived data source to only users who can see PII. This allows data sharing without involving an administrator and delivers complete policy automation.
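The inheritance rule in this example can be sketched as a function of the Project's equalized view. This assumes, hypothetically, that the equalized view is a map of table to columns visible in the clear and that PII columns are known per table; the return labels (`everyone`, `users_with_pii_access`) are illustrative, not Immuta policy names.

```python
def derived_source_policy(equalized_clear, pii_columns):
    """Hypothetical inheritance rule for a table registered from a Project.

    If the equalized view exposed any PII in the clear, only users who may
    see PII can read the derived source; otherwise it can be opened to anyone.
    """
    exposed = {
        (table, col)
        for table, cols in equalized_clear.items()
        for col in cols & pii_columns.get(table, set())
    }
    if exposed:
        return {"readable_by": "users_with_pii_access", "exposed_pii": exposed}
    return {"readable_by": "everyone", "exposed_pii": set()}


pii = {"table_a": {"email", "ssn"}}
# Eric in the Project: PII was equalized away.
print(derived_source_policy({"table_a": {"id", "signup"}}, pii))
# Mary alone: PII was visible, so access stays restricted.
print(derived_source_policy({"table_a": {"id", "email", "ssn", "signup"}}, pii))
```

Deriving the policy from the equalization level, rather than from who registered the table, is what lets the output be shared without an administrator reviewing it.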

In conclusion, when moving into Phase 1 of the privacy maturity spectrum, you must consider Phase 2 as well, or you will stifle collaboration in your data platform. Immuta solves this elegantly through Immuta Projects, streamlining your ability to manage Databricks data clean rooms. You can see an in-depth demonstration of this capability here.

Interested in exploring Immuta and the capabilities we just discussed? Request a demo today.